ChatGPT 学术优化

"""
@@ -105,8 +105,7 @@ def auto_opentab_delay():
     def open():
         time.sleep(2)
         webbrowser.open_new_tab(f'http://localhost:{PORT}')
-    t = threading.Thread(target=open)
-    t.daemon = True; t.start()
+    threading.Thread(target=open, name="open-browser", daemon=True).start()
 auto_opentab_delay()
 demo.title = "ChatGPT 学术优化"
diff --git a/toolbox.py b/toolbox.py
index d96b3f61..326740ab 100644
--- a/toolbox.py
+++ b/toolbox.py
@@ -226,13 +226,10 @@ def get_conf(*args):
         except:
             r = getattr(importlib.import_module('config'), arg)
         res.append(r)
         # 在读取API_KEY时,检查一下是不是忘了改config
-        if arg=='API_KEY' and len(r) != 51:
-            assert False, "正确的API_KEY密钥是51位,请在config文件中修改API密钥, 添加海外代理之后再运行。" + \
+        assert arg != 'API_KEY' or len(r) == 51, "正确的API_KEY密钥是51位,请在config文件中修改API密钥, 添加海外代理之后再运行。" \
                 "(如果您刚更新过代码,请确保旧版config_private文件中没有遗留任何新增键值)"
     return res
 def clear_line_break(txt):
-    txt = txt.replace('\n', ' ')
-    txt = txt.replace(' ', ' ')
-    txt = txt.replace(' ', ' ')
-    return txt
\ No newline at end of file
+    import re
+    return re.sub(r"\s+", " ", txt)
\ No newline at end of file
From 808e23c98af2e88a0259ae84001ed31a3e5fb990 Mon Sep 17 00:00:00 2001
From: Jia Xinglong'

+ suf = '

'

     if ('$' in txt) and ('```' not in txt):
-        return markdown.markdown(txt,extensions=['fenced_code','tables']) + '' + \
-            markdown.markdown(convert_math(txt, splitParagraphs=False),extensions=['fenced_code','tables'])
+        return pre + markdown.markdown(txt,extensions=['fenced_code','tables']) + '

' + markdown.markdown(convert_math(txt, splitParagraphs=False),extensions=['fenced_code','tables']) + suf
     else:
-        return markdown.markdown(txt,extensions=['fenced_code','tables'])
+        return pre + markdown.markdown(txt,extensions=['fenced_code','tables']) + suf
 def format_io(self, y):
From 01931b0bd2f95209133e861c05ba83be29dc7e06 Mon Sep 17 00:00:00 2001
From: Your Name

ChatGPT 学术优化

"""
@@ -57,25 +57,27 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False) as demo:
             with gr.Row():
                 from check_proxy import check_proxy
                 statusDisplay = gr.Markdown(f"Tip: 按Enter提交, 按Shift+Enter换行。当前模型: {LLM_MODEL} \n {check_proxy(proxies)}")
-            with gr.Row():
-                for k in functional:
-                    variant = functional[k]["Color"] if "Color" in functional[k] else "secondary"
-                    functional[k]["Button"] = gr.Button(k, variant=variant)
-            with gr.Row():
-                gr.Markdown("注意:以下“红颜色”标识的函数插件需从input区读取路径作为参数.")
-            with gr.Row():
-                for k in crazy_functional:
-                    variant = crazy_functional[k]["Color"] if "Color" in crazy_functional[k] else "secondary"
-                    crazy_functional[k]["Button"] = gr.Button(k, variant=variant)
-            with gr.Row():
-                gr.Markdown("上传本地文件,供上面的函数插件调用.")
-            with gr.Row():
-                file_upload = gr.Files(label='任何文件, 但推荐上传压缩文件(zip, tar)', file_count="multiple")
-            system_prompt = gr.Textbox(show_label=True, placeholder=f"System Prompt", label="System prompt", value=initial_prompt).style(container=True)
-            with gr.Accordion("arguments", open=False):
+            with gr.Accordion("基础功能区", open=True):
+                with gr.Row():
+                    for k in functional:
+                        variant = functional[k]["Color"] if "Color" in functional[k] else "secondary"
+                        functional[k]["Button"] = gr.Button(k, variant=variant)
+            with gr.Accordion("函数插件区", open=True):
+                with gr.Row():
+                    gr.Markdown("注意:以下“红颜色”标识的函数插件需从input区读取路径作为参数.")
+                with gr.Row():
+                    for k in crazy_functional:
+                        variant = crazy_functional[k]["Color"] if "Color" in crazy_functional[k] else "secondary"
+                        crazy_functional[k]["Button"] = gr.Button(k, variant=variant)
+                with gr.Row():
+                    with gr.Accordion("展开“文件上传区”。上传本地文件供“红颜色”的函数插件调用。", open=False):
+                        file_upload = gr.Files(label='任何文件, 但推荐上传压缩文件(zip, tar)', file_count="multiple")
+            with gr.Accordion("展开SysPrompt & GPT参数 & 交互界面布局", open=False):
+                system_prompt = gr.Textbox(show_label=True, placeholder=f"System Prompt", label="System prompt", value=initial_prompt)
                 top_p = gr.Slider(minimum=-0, maximum=1.0, value=1.0, step=0.01,interactive=True, label="Top-p (nucleus sampling)",)
                 temperature = gr.Slider(minimum=-0, maximum=2.0, value=1.0, step=0.01, interactive=True, label="Temperature",)
-
+                checkboxes = gr.CheckboxGroup(["基础功能区", "函数插件区", "文件上传区"], value=["USA", "Japan", "Pakistan"],
+                                              label="显示功能区")
     predict_args = dict(fn=predict, inputs=[txt, top_p, temperature, chatbot, history, system_prompt], outputs=[chatbot, history, statusDisplay], show_progress=True)
     empty_txt_args = dict(fn=lambda: "", inputs=[], outputs=[txt]) # 用于在提交后清空输入栏
@@ -105,8 +107,7 @@ def auto_opentab_delay():
     def open():
         time.sleep(2)
         webbrowser.open_new_tab(f'http://localhost:{PORT}')
-    t = threading.Thread(target=open)
-    t.daemon = True; t.start()
+    threading.Thread(target=open, name="open-browser", daemon=True).start()
 auto_opentab_delay()
 demo.title = "ChatGPT 学术优化"
From 17a18e99fa84f519e8ea6c85cc3df0bca83911c5 Mon Sep 17 00:00:00 2001
From: Your Name
ChatGPT 学术优化

"""
 # 问询记录, python 版本建议3.9+(越新越好)
 import logging
-os.makedirs('gpt_log', exist_ok=True)
-try:logging.basicConfig(filename='gpt_log/chat_secrets.log', level=logging.INFO, encoding='utf-8')
-except:logging.basicConfig(filename='gpt_log/chat_secrets.log', level=logging.INFO)
-print('所有问询记录将自动保存在本地目录./gpt_log/chat_secrets.log, 请注意自我隐私保护哦!')
+os.makedirs("gpt_log", exist_ok=True)
+try:logging.basicConfig(filename="gpt_log/chat_secrets.log", level=logging.INFO, encoding="utf-8")
+except:logging.basicConfig(filename="gpt_log/chat_secrets.log", level=logging.INFO)
+print("所有问询记录将自动保存在本地目录./gpt_log/chat_secrets.log, 请注意自我隐私保护哦!")
 # 一些普通功能模块
 from functional import get_functionals
 functional = get_functionals()
-# 对一些丧心病狂的实验性功能模块进行测试
+# 高级函数插件
 from functional_crazy import get_crazy_functionals
-crazy_functional = get_crazy_functionals()
+crazy_fns = get_crazy_functionals()
 # 处理markdown文本格式的转变
 gr.Chatbot.postprocess = format_io
@@ -40,11 +39,10 @@ set_theme = adjust_theme()
 cancel_handles = []
 with gr.Blocks(theme=set_theme, analytics_enabled=False) as demo:
     gr.HTML(title_html)
-    with gr.Row():
+    with gr.Row().style(equal_height=True):
         with gr.Column(scale=2):
             chatbot = gr.Chatbot()
-            chatbot.style(height=1150)
-            chatbot.style()
+            chatbot.style(height=CHATBOT_HEIGHT)
             history = gr.State([])
         with gr.Column(scale=1):
             with gr.Row():
@@ -66,49 +64,70 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False) as demo:
                 with gr.Row():
                     gr.Markdown("注意:以下“红颜色”标识的函数插件需从input区读取路径作为参数.")
                 with gr.Row():
-                    for k in crazy_functional:
-                        variant = crazy_functional[k]["Color"] if "Color" in crazy_functional[k] else "secondary"
-                        crazy_functional[k]["Button"] = gr.Button(k, variant=variant)
+                    for k in crazy_fns:
+                        if not crazy_fns[k].get("AsButton", True): continue
+                        variant = crazy_fns[k]["Color"] if "Color" in crazy_fns[k] else "secondary"
+                        crazy_fns[k]["Button"] = gr.Button(k, variant=variant)
                 with gr.Row():
-                    with gr.Accordion("展开“文件上传区”。上传本地文件供“红颜色”的函数插件调用。", open=False):
-                        file_upload = gr.Files(label='任何文件, 但推荐上传压缩文件(zip, tar)', file_count="multiple")
+                    with gr.Accordion("更多函数插件", open=True):
+                        dropdown_fn_list = [k for k in crazy_fns.keys() if not crazy_fns[k].get("AsButton", True)]
+                        with gr.Column(scale=1):
+                            dropdown = gr.Dropdown(dropdown_fn_list, value=r"打开插件列表", label="").style(container=False)
+                        with gr.Column(scale=1):
+                            switchy_bt = gr.Button(r"请先从插件列表中选择", variant="secondary")
+                with gr.Row():
+                    with gr.Accordion("点击展开“文件上传区”。上传本地文件可供红色函数插件调用。", open=False) as area_file_up:
+                        file_upload = gr.Files(label="任何文件, 但推荐上传压缩文件(zip, tar)", file_count="multiple")
             with gr.Accordion("展开SysPrompt & GPT参数 & 交互界面布局", open=False):
                 system_prompt = gr.Textbox(show_label=True, placeholder=f"System Prompt", label="System prompt", value=initial_prompt)
                 top_p = gr.Slider(minimum=-0, maximum=1.0, value=1.0, step=0.01,interactive=True, label="Top-p (nucleus sampling)",)
                 temperature = gr.Slider(minimum=-0, maximum=2.0, value=1.0, step=0.01, interactive=True, label="Temperature",)
-                checkboxes = gr.CheckboxGroup(["基础功能区", "函数插件区"],
-                    value=["基础功能区", "函数插件区"], label="显示哪些功能区")
+                checkboxes = gr.CheckboxGroup(["基础功能区", "函数插件区"], value=["基础功能区", "函数插件区"], label="显示/隐藏功能区")
-    def what_is_this(a):
+    # 功能区显示开关与功能区的互动
+    def fn_area_visibility(a):
         ret = {}
-        # if area_basic_fn.visible != ("基础功能区" in a):
-        ret.update({area_basic_fn: gr.update(visible=("基础功能区" in a))})
-        # if area_crazy_fn.visible != ("函数插件区" in a):
-        ret.update({area_crazy_fn: gr.update(visible=("函数插件区" in a))})
+        ret.update({area_basic_fn: gr.update(visible=("基础功能区" in a))})
+        ret.update({area_crazy_fn: gr.update(visible=("函数插件区" in a))})
         return ret
-
-    checkboxes.select(what_is_this, [checkboxes], [area_basic_fn, area_crazy_fn] )
-
-    predict_args = dict(fn=predict, inputs=[txt, top_p, temperature, chatbot, history, system_prompt], outputs=[chatbot, history, statusDisplay], show_progress=True)
+    checkboxes.select(fn_area_visibility, [checkboxes], [area_basic_fn, area_crazy_fn] )
+    # 整理反复出现的控件句柄组合
+    input_combo = [txt, top_p, temperature, chatbot, history, system_prompt]
+    output_combo = [chatbot, history, statusDisplay]
+    predict_args = dict(fn=predict, inputs=input_combo, outputs=output_combo, show_progress=True)
     empty_txt_args = dict(fn=lambda: "", inputs=[], outputs=[txt]) # 用于在提交后清空输入栏
-
-    cancel_handles.append(txt.submit(**predict_args))
-    # txt.submit(**empty_txt_args) 在提交后清空输入栏
-    cancel_handles.append(submitBtn.click(**predict_args))
-    # submitBtn.click(**empty_txt_args) 在提交后清空输入栏
-    resetBtn.click(lambda: ([], [], "已重置"), None, [chatbot, history, statusDisplay])
+    # 提交按钮、重置按钮
+    cancel_handles.append(txt.submit(**predict_args)) #; txt.submit(**empty_txt_args) 在提交后清空输入栏
+    cancel_handles.append(submitBtn.click(**predict_args)) #; submitBtn.click(**empty_txt_args) 在提交后清空输入栏
+    resetBtn.click(lambda: ([], [], "已重置"), None, output_combo)
+    # 基础功能区的回调函数注册
     for k in functional:
-        click_handle = functional[k]["Button"].click(predict,
-            [txt, top_p, temperature, chatbot, history, system_prompt, gr.State(True), gr.State(k)], [chatbot, history, statusDisplay], show_progress=True)
+        click_handle = functional[k]["Button"].click(predict, [*input_combo, gr.State(True), gr.State(k)], output_combo, show_progress=True)
         cancel_handles.append(click_handle)
+    # 文件上传区,接收文件后与chatbot的互动
     file_upload.upload(on_file_uploaded, [file_upload, chatbot, txt], [chatbot, txt])
-    for k in crazy_functional:
-        click_handle = crazy_functional[k]["Button"].click(crazy_functional[k]["Function"],
-            [txt, top_p, temperature, chatbot, history, system_prompt, gr.State(PORT)], [chatbot, history, statusDisplay]
-        )
-        try: click_handle.then(on_report_generated, [file_upload, chatbot], [file_upload, chatbot])
-        except: pass
+    # 函数插件-固定按钮区
+    for k in crazy_fns:
+        if not crazy_fns[k].get("AsButton", True): continue
+        click_handle = crazy_fns[k]["Button"].click(crazy_fns[k]["Function"], [*input_combo, gr.State(PORT)], output_combo)
+        click_handle.then(on_report_generated, [file_upload, chatbot], [file_upload, chatbot])
         cancel_handles.append(click_handle)
+    # 函数插件-下拉菜单与随变按钮的互动
+    def on_dropdown_changed(k):
+        variant = crazy_fns[k]["Color"] if "Color" in crazy_fns[k] else "secondary"
+        return {switchy_bt: gr.update(value=k, variant=variant)}
+    dropdown.select(on_dropdown_changed, [dropdown], [switchy_bt] )
+    # 随变按钮的回调函数注册
+    def route(k, *args, **kwargs):
+        if k in [r"打开插件列表", r"先从插件列表中选择"]: return
+        yield from crazy_fns[k]["Function"](*args, **kwargs)
+    click_handle = switchy_bt.click(route,[switchy_bt, *input_combo, gr.State(PORT)], output_combo)
+    click_handle.then(on_report_generated, [file_upload, chatbot], [file_upload, chatbot])
+    def expand_file_area(file_upload, area_file_up):
+        if len(file_upload)>0: return {area_file_up: gr.update(open=True)}
+    click_handle.then(expand_file_area, [file_upload, area_file_up], [area_file_up])
+    cancel_handles.append(click_handle)
+    # 终止按钮的回调函数注册
     stopBtn.click(fn=None, inputs=None, outputs=None, cancels=cancel_handles)
 # gradio的inbrowser触发不太稳定,回滚代码到原始的浏览器打开函数
@@ -117,7 +136,7 @@ def auto_opentab_delay():
     print(f"如果浏览器没有自动打开,请复制并转到以下URL: http://localhost:{PORT}")
     def open():
         time.sleep(2)
-        webbrowser.open_new_tab(f'http://localhost:{PORT}')
+        webbrowser.open_new_tab(f"http://localhost:{PORT}")
     threading.Thread(target=open, name="open-browser", daemon=True).start()
 auto_opentab_delay()
diff --git a/predict.py b/predict.py
index 84036bc9..31a58613 100644
--- a/predict.py
+++ b/predict.py
@@ -96,13 +96,19 @@ def predict_no_ui_long_connection(inputs, top_p, temperature, history=[], sys_pr
         except StopIteration: break
         if len(chunk)==0: continue
         if not chunk.startswith('data:'):
-            chunk = get_full_error(chunk.encode('utf8'), stream_response)
-            raise ConnectionAbortedError("OpenAI拒绝了请求:" + chunk.decode())
-        delta = json.loads(chunk.lstrip('data:'))['choices'][0]["delta"]
+            error_msg = get_full_error(chunk.encode('utf8'), stream_response).decode()
+            if "reduce the length" in error_msg:
+                raise ConnectionAbortedError("OpenAI拒绝了请求:" + error_msg)
+            else:
+                raise RuntimeError("OpenAI拒绝了请求:" + error_msg)
+        json_data = json.loads(chunk.lstrip('data:'))['choices'][0]
+        delta = json_data["delta"]
         if len(delta) == 0: break
         if "role" in delta: continue
         if "content" in delta: result += delta["content"]; print(delta["content"], end='')
         else: raise RuntimeError("意外Json结构:"+delta)
+        if json_data['finish_reason'] == 'length':
+            raise ConnectionAbortedError("正常结束,但显示Token不足。")
     return result
diff --git a/toolbox.py b/toolbox.py
index b78a5135..bf887602 100644
--- a/toolbox.py
+++ b/toolbox.py
@@ -2,21 +2,21 @@ import markdown, mdtex2html, threading, importlib, traceback, importlib, inspect
 from show_math import convert as convert_math
 from functools import wraps
-def get_reduce_token_percent(e):
+def get_reduce_token_percent(text):
     try:
         # text = "maximum context length is 4097 tokens. However, your messages resulted in 4870 tokens"
         pattern = r"(\d+)\s+tokens\b"
         match = re.findall(pattern, text)
-        eps = 50 # 稍微留一点余地, 确保下次别再超过token
-        max_limit = float(match[0]) - eps
+        EXCEED_ALLO = 500 # 稍微留一点余地,否则在回复时会因余量太少出问题
+        max_limit = float(match[0]) - EXCEED_ALLO
         current_tokens = float(match[1])
         ratio = max_limit/current_tokens
         assert ratio > 0 and ratio < 1
-        return ratio
+        return ratio, str(int(current_tokens-max_limit))
     except:
-        return 0.5
+        return 0.5, '不详'
-def predict_no_ui_but_counting_down(i_say, i_say_show_user, chatbot, top_p, temperature, history=[], sys_prompt='', long_connection=False):
+def predict_no_ui_but_counting_down(i_say, i_say_show_user, chatbot, top_p, temperature, history=[], sys_prompt='', long_connection=True):
     """
     调用简单的predict_no_ui接口,但是依然保留了些许界面心跳功能,当对话太长时,会自动采用二分法截断
     i_say: 当前输入
@@ -45,19 +45,18 @@ def predict_no_ui_but_counting_down(i_say, i_say_show_user, chatbot, top_p, temp
                 break
             except ConnectionAbortedError as token_exceeded_error:
                 # 尝试计算比例,尽可能多地保留文本
-                p_ratio = get_reduce_token_percent(str(token_exceeded_error))
+                p_ratio, n_exceed = get_reduce_token_percent(str(token_exceeded_error))
                 if len(history) > 0:
                     history = [his[ int(len(his) *p_ratio): ] for his in history if his is not None]
-                    mutable[1] = 'Warning! History conversation is too long, cut into half. '
                 else:
                     i_say = i_say[: int(len(i_say) *p_ratio) ]
-                    mutable[1] = 'Warning! Input file is too long, cut into half. '
+                mutable[1] = f'警告,文本过长将进行截断,Token溢出数:{n_exceed},截断比例:{(1-p_ratio):.0%}。'
             except TimeoutError as e:
-                mutable[0] = '[Local Message] Failed with timeout.'
+                mutable[0] = '[Local Message] 请求超时。'
                 raise TimeoutError
             except Exception as e:
-                mutable[0] = f'[Local Message] Failed with {str(e)}.'
-                raise RuntimeError(f'[Local Message] Failed with {str(e)}.')
+                mutable[0] = f'[Local Message] 异常:{str(e)}.'
+                raise RuntimeError(f'[Local Message] 异常:{str(e)}.')
     # 创建新线程发出http请求
     thread_name = threading.Thread(target=mt, args=(i_say, history)); thread_name.start()
     # 原来的线程则负责持续更新UI,实现一个超时倒计时,并等待新线程的任务完成
@@ -286,7 +285,7 @@ def on_report_generated(files, chatbot):
     report_files = find_recent_files('gpt_log')
     if len(report_files) == 0: return report_files, chatbot
     # files.extend(report_files)
-    chatbot.append(['汇总报告如何远程获取?', '汇总报告已经添加到右侧文件上传区,请查收。'])
+    chatbot.append(['汇总报告如何远程获取?', '汇总报告已经添加到右侧“文件上传区”(可能处于折叠状态),请查收。'])
     return report_files, chatbot
 def get_conf(*args):
From 639e24fc8227d484d198d24b331a6d7e98bafbb4 Mon Sep 17 00:00:00 2001
From: Your Name

- +

+ +

+

-

- 所有按钮都通过读取functional.py动态生成,可随意加自定义功能,解放粘贴板

+

+ +

+
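The `toolbox.py` hunk earlier in this series changes `get_reduce_token_percent` to return both a keep-ratio and the number of overflowing tokens, with a 500-token safety margin. The following is a minimal standalone sketch of that parsing, assuming the error text contains the two counts in the `N tokens ... M tokens` shape quoted in the patch's own comment. `exceed_allowance` and `truncate_input` are names introduced here for illustration, and the narrower `except` clause is a deliberate departure from the bare `except:` in the patch.

```python
import re


def get_reduce_token_percent(text, exceed_allowance=500):
    """Return (keep_ratio, n_exceed) parsed from a context-length error message.

    Assumes "<limit> tokens ... <used> tokens" appears in `text`; falls back to
    (0.5, "unknown") when the message cannot be parsed or the ratio is invalid.
    """
    try:
        match = re.findall(r"(\d+)\s+tokens\b", text)
        # Subtract a safety margin so the retried request also leaves room for the reply.
        max_limit = float(match[0]) - exceed_allowance
        current_tokens = float(match[1])
        ratio = max_limit / current_tokens
        if not 0 < ratio < 1:
            raise ValueError("ratio out of range")
        return ratio, str(int(current_tokens - max_limit))
    except (IndexError, ValueError, ZeroDivisionError):
        return 0.5, "unknown"


def truncate_input(i_say, ratio):
    """Keep the leading `ratio` fraction of the input, as the patch does for i_say."""
    return i_say[: int(len(i_say) * ratio)]


msg = ("This model's maximum context length is 4097 tokens. "
       "However, your messages resulted in 4870 tokens")
ratio, n_exceed = get_reduce_token_percent(msg)
print(f"keep {ratio:.0%}, overflow {n_exceed} tokens")
```

Keeping the margin as a parameter makes it easy to see why the patch raised it from 50 to 500: the margin is subtracted before the ratio is computed, so a larger value truncates more aggressively.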

From 6789eaee45efeeaa38a4c6592abff896a141b1ea Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sat, 1 Apr 2023 04:25:03 +0800

Subject: [PATCH 047/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 1ca58a03..69e39aa8 100644

--- a/README.md

+++ b/README.md

@@ -51,7 +51,7 @@ chat分析报告生成 | [实验性功能] 运行后自动生成总结汇报

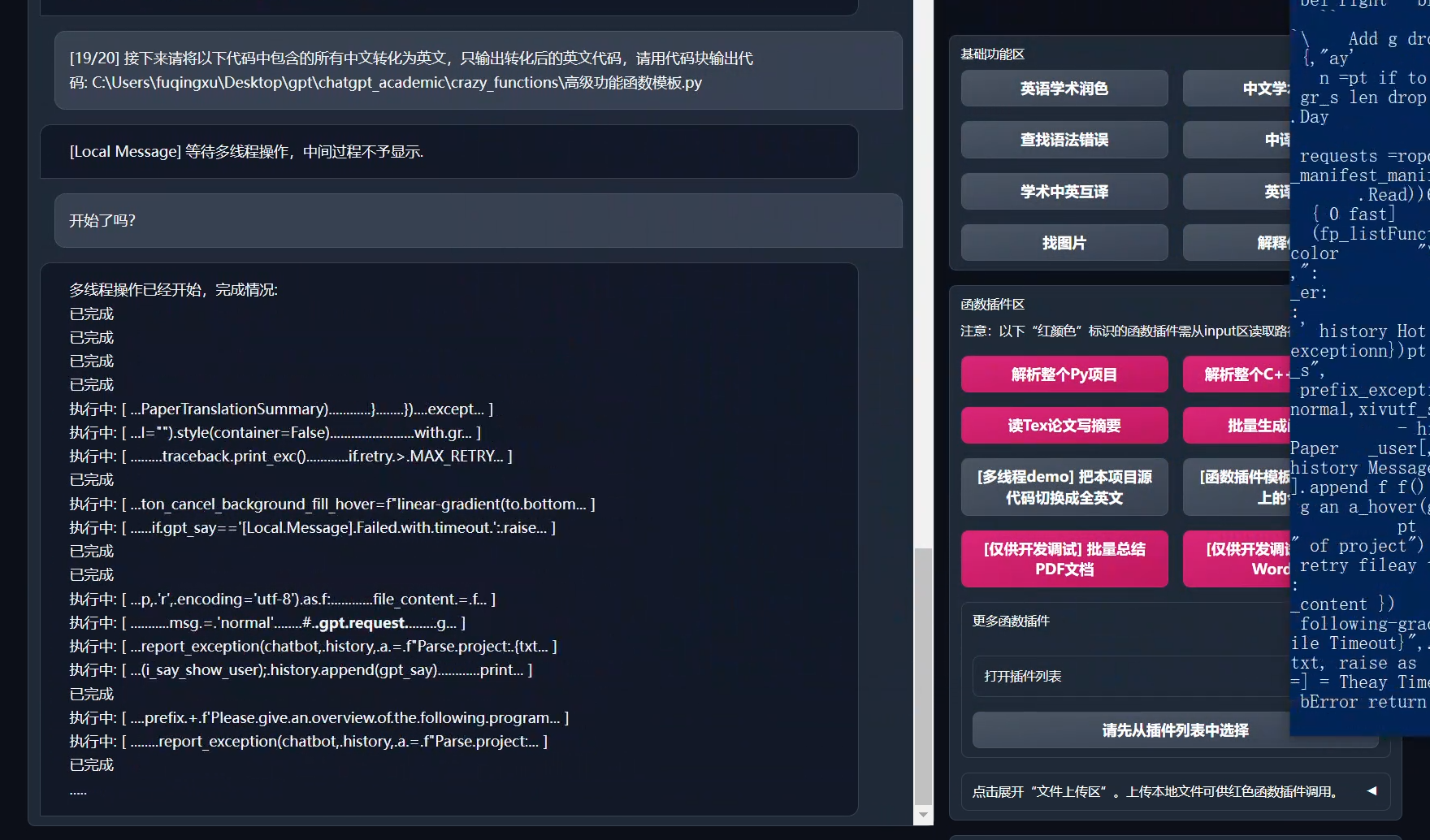

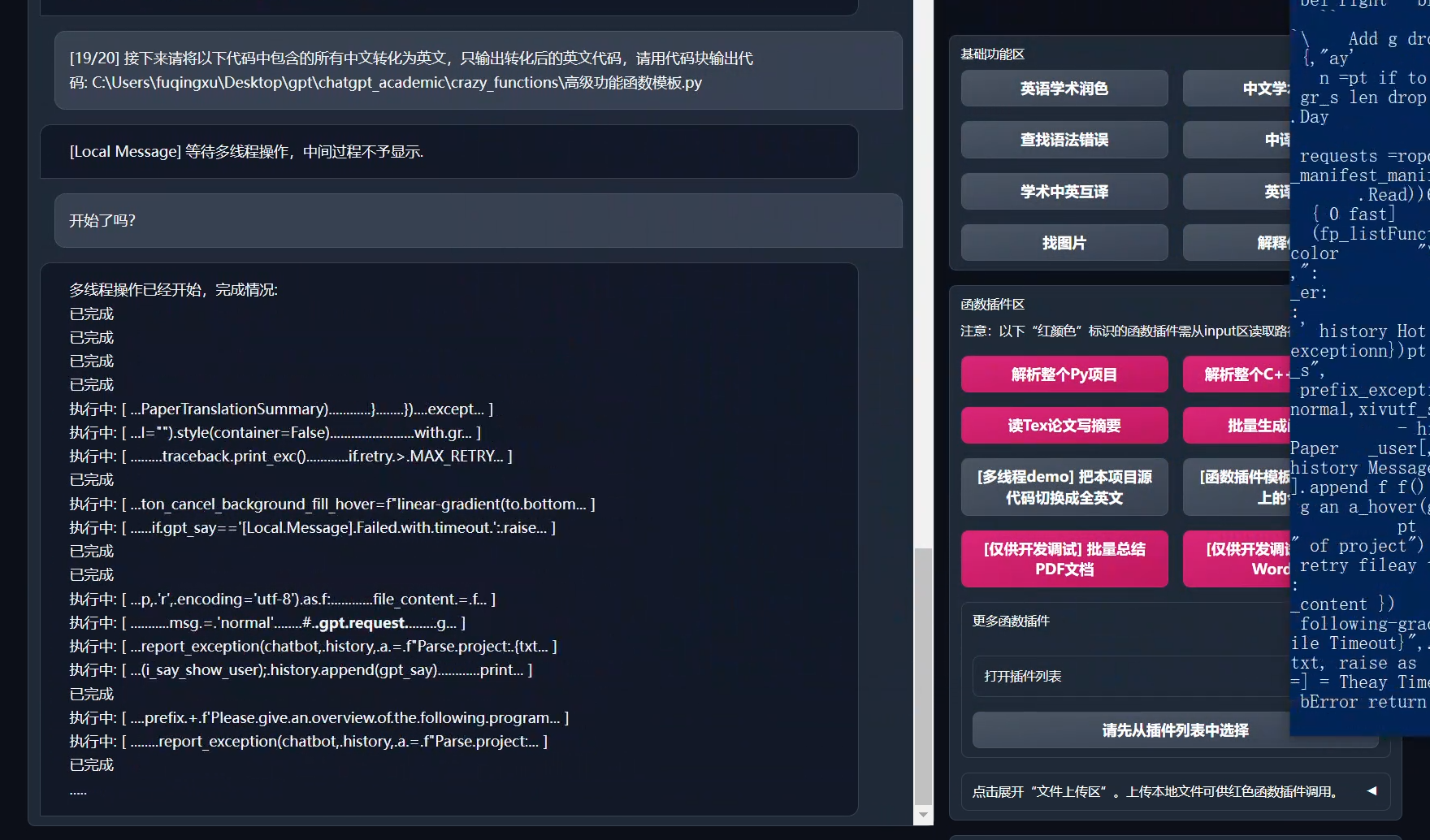

- 新界面(左:master主分支, 右:dev开发前沿)


-

- +

+

-
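For the `predict_no_ui_long_connection` change earlier in this series, here is a hedged sketch of how a single streamed line could be parsed. It assumes OpenAI's server-sent-event lines of the form `data: {...}` carrying `choices[0].delta` and `finish_reason`, plus a final `data: [DONE]`. `parse_stream_chunk` and `TokenLimitReached` are hypothetical names. The sketch slices off the literal `data:` prefix, because `str.lstrip('data:')` (as used in the patch) strips any leading run of the characters `d`, `a`, `t` and `:`. That is harmless in the patch only because the JSON payload starts with a space or `{`. It also checks `finish_reason` before treating an empty delta as the end of the stream; that ordering is a choice made in this sketch, not the patch's.

```python
import json


class TokenLimitReached(Exception):
    """Hypothetical stand-in for the ConnectionAbortedError raised in predict.py."""


def parse_stream_chunk(chunk):
    """Parse one 'data: {...}' line of a streamed chat completion.

    Returns the content delta ("" for role-only deltas), or None at the end of
    the stream. Raises TokenLimitReached when finish_reason is 'length'.
    """
    prefix = "data:"
    if not chunk.startswith(prefix):
        # Anything that is not an SSE data line is treated as an error body.
        raise RuntimeError("OpenAI rejected the request: " + chunk)
    payload = chunk[len(prefix):].strip()  # slice the literal prefix off
    if payload == "[DONE]":
        return None
    choice = json.loads(payload)["choices"][0]
    if choice.get("finish_reason") == "length":
        raise TokenLimitReached("stream finished, but the token budget ran out")
    delta = choice["delta"]
    if not delta:
        return None
    return delta.get("content", "")
```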

From c9c9449a5903d4ded958ae807ce83ee79c165027 Mon Sep 17 00:00:00 2001

From: Your Name

- +

+

From 998e127b2f4f899476e93943ce89b11b1c6db768 Mon Sep 17 00:00:00 2001

From: Keldos  +

+

- +

+

From e35f7a7186db8b74ff9e5442d7821c8c98943122 Mon Sep 17 00:00:00 2001

From: Keldos  +

+

ChatGPT 学术优化

"""
+title_html = f"{title}

"
 # 问询记录, python 版本建议3.9+(越新越好)
 import logging
@@ -140,5 +141,5 @@ def auto_opentab_delay():
     threading.Thread(target=open, name="open-browser", daemon=True).start()
 auto_opentab_delay()
-demo.title = "ChatGPT 学术优化"
+demo.title = title
 demo.queue(concurrency_count=CONCURRENT_COUNT).launch(server_name="0.0.0.0", share=True, server_port=PORT, auth=AUTHENTICATION)
diff --git a/request_llm/bridge_tgui.py b/request_llm/bridge_tgui.py
index 20a63521..d7cbe107 100644
--- a/request_llm/bridge_tgui.py
+++ b/request_llm/bridge_tgui.py
@@ -24,9 +24,9 @@ def random_hash():
     letters = string.ascii_lowercase + string.digits
     return ''.join(random.choice(letters) for i in range(9))
-async def run(context):
+async def run(context, max_token=512):
     params = {
-        'max_new_tokens': 512,
+        'max_new_tokens': max_token,
         'do_sample': True,
         'temperature': 0.5,
         'top_p': 0.9,
@@ -116,12 +116,15 @@ def predict_tgui(inputs, top_p, temperature, chatbot=[], history=[], system_prom
     prompt = inputs
     tgui_say = ""
-    mutable = [""]
+    mutable = ["", time.time()]
     def run_coorotine(mutable):
         async def get_result(mutable):
             async for response in run(prompt):
                 print(response[len(mutable[0]):])
                 mutable[0] = response
+                if (time.time() - mutable[1]) > 3:
+                    print('exit when no listener')
+                    break
         asyncio.run(get_result(mutable))
     thread_listen = threading.Thread(target=run_coorotine, args=(mutable,), daemon=True)
@@ -129,6 +132,7 @@ def predict_tgui(inputs, top_p, temperature, chatbot=[], history=[], system_prom
     while thread_listen.is_alive():
         time.sleep(1)
+        mutable[1] = time.time()
         # Print intermediate steps
         if tgui_say != mutable[0]:
             tgui_say = mutable[0]
@@ -147,12 +151,17 @@ def predict_tgui_no_ui(inputs, top_p, temperature, history=[], sys_prompt=""):
     mutable = ["", time.time()]
     def run_coorotine(mutable):
         async def get_result(mutable):
-            async for response in run(prompt):
+            async for response in run(prompt, max_token=20):
                 print(response[len(mutable[0]):])
                 mutable[0] = response
+                if (time.time() - mutable[1]) > 3:
+                    print('exit when no listener')
+                    break
         asyncio.run(get_result(mutable))
     thread_listen = threading.Thread(target=run_coorotine, args=(mutable,))
     thread_listen.start()
-    thread_listen.join()
+    while thread_listen.is_alive():
+        time.sleep(1)
+        mutable[1] = time.time()
     tgui_say = mutable[0]
     return tgui_say
From 9f07531a161e503b39c8d0cfe2127585e6548a0f Mon Sep 17 00:00:00 2001
From: Your Name
{title}

"
+title_html = """ChatGPT 学术优化

"""
 # 问询记录, python 版本建议3.9+(越新越好)
 import logging
@@ -120,7 +119,7 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as de
     dropdown.select(on_dropdown_changed, [dropdown], [switchy_bt] )
     # 随变按钮的回调函数注册
     def route(k, *args, **kwargs):
-        if k in [r"打开插件列表", r"先从插件列表中选择"]: return
+        if k in [r"打开插件列表", r"请先从插件列表中选择"]: return
         yield from crazy_fns[k]["Function"](*args, **kwargs)
     click_handle = switchy_bt.click(route,[switchy_bt, *input_combo, gr.State(PORT)], output_combo)
     click_handle.then(on_report_generated, [file_upload, chatbot], [file_upload, chatbot])
@@ -141,5 +140,5 @@ def auto_opentab_delay():
     threading.Thread(target=open, name="open-browser", daemon=True).start()
 auto_opentab_delay()
-demo.title = title
+demo.title = "ChatGPT 学术优化"
 demo.queue(concurrency_count=CONCURRENT_COUNT).launch(server_name="0.0.0.0", share=True, server_port=PORT, auth=AUTHENTICATION)
diff --git a/predict.py b/predict.py
index 1310d3ff..31a58613 100644
--- a/predict.py
+++ b/predict.py
@@ -112,7 +112,8 @@ def predict_no_ui_long_connection(inputs, top_p, temperature, history=[], sys_pr
     return result
-def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt='', stream = True, additional_fn=None):
+def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt='',
+            stream = True, additional_fn=None):
     """
     发送至chatGPT,流式获取输出。
     用于基础的对话功能。
@@ -243,11 +244,3 @@ def generate_payload(inputs, top_p, temperature, history, system_prompt, stream)
     return headers,payload
-if not LLM_MODEL.startswith('gpt'):
-    # 函数重载到另一个文件
-    from request_llm.bridge_tgui import predict_tgui, predict_tgui_no_ui
-    predict = predict_tgui
-    predict_no_ui = predict_tgui_no_ui
-    predict_no_ui_long_connection = predict_tgui_no_ui
-
-
\ No newline at end of file
From 19aba350a3d906f1f735ae6591f89fdfb52153d6 Mon Sep 17 00:00:00 2001
From: Your Name

@@ -204,6 +204,8 @@ input区域 输入 ./crazy_functions/test_project/python/dqn , 然后点击 "[

```

python check_proxy.py

```

+### 方法二:纯新手教程

+[纯新手教程](https://github.com/binary-husky/chatgpt_academic/wiki/%E4%BB%A3%E7%90%86%E8%BD%AF%E4%BB%B6%E9%97%AE%E9%A2%98%E7%9A%84%E6%96%B0%E6%89%8B%E8%A7%A3%E5%86%B3%E6%96%B9%E6%B3%95%EF%BC%88%E6%96%B9%E6%B3%95%E5%8F%AA%E9%80%82%E7%94%A8%E4%BA%8E%E6%96%B0%E6%89%8B%EF%BC%89)

## 兼容性测试
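The `bridge_tgui.py` hunks earlier in this series replace `thread_listen.join()` with a polling loop that stamps `mutable[1] = time.time()`. The streaming coroutine breaks out once that stamp is more than 3 s old, presumably so that a request nobody is watching stops consuming the backend. The following is a minimal sketch of that heartbeat pattern, with a fake async generator standing in for the text-generation-webui stream. `fake_stream` and `consume_with_watchdog` are hypothetical names, and the short sleeps are only there to keep the demo quick.

```python
import asyncio
import threading
import time


async def fake_stream(n):
    """Hypothetical stand-in for bridge_tgui.run(): yields an ever-growing reply."""
    text = ""
    for i in range(n):
        await asyncio.sleep(0.01)
        text += str(i)
        yield text


def consume_with_watchdog(stream, state, timeout=3.0):
    """Copy each response into state[0]; stop once state[1] is older than `timeout` seconds."""
    async def drain():
        async for response in stream:
            state[0] = response
            if time.time() - state[1] > timeout:
                print("exit when no listener")
                break
    asyncio.run(drain())


# Usage: the UI thread keeps refreshing the heartbeat while the worker streams.
state = ["", time.time()]
worker = threading.Thread(target=consume_with_watchdog, args=(fake_stream(20), state), daemon=True)
worker.start()
while worker.is_alive():
    time.sleep(0.05)
    state[1] = time.time()  # heartbeat: someone is still listening
```

Sharing a two-element list between threads mirrors the patch's `mutable`. A `threading.Event` or a timestamp guarded by a lock would be more explicit alternatives.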

From 16de1812d3ed4af123545c8ab0c68584b3439ca9 Mon Sep 17 00:00:00 2001

From: Your Name

Date: Sun, 2 Apr 2023 20:02:47 +0800

Subject: [PATCH 076/154] Fine-tune theme

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

theme.py | 18 +++++++++---------

1 file changed, 9 insertions(+), 9 deletions(-)

diff --git a/theme.py b/theme.py

index f5fd02a7..06d377de 100644

--- a/theme.py

+++ b/theme.py

@@ -107,20 +107,20 @@ advanced_css = """

/* 对话气泡 */

[class *= "message"] {

border-radius: var(--radius-xl) !important;

- padding: var(--spacing-xl) !important;

- font-size: var(--text-md) !important;

- line-height: var(--line-md) !important;

- min-height: calc(var(--text-md)*var(--line-md) + 2*var(--spacing-xl));

- min-width: calc(var(--text-md)*var(--line-md) + 2*var(--spacing-xl));

+ /* padding: var(--spacing-xl) !important; */

+ /* font-size: var(--text-md) !important; */

+ /* line-height: var(--line-md) !important; */

+ /* min-height: calc(var(--text-md)*var(--line-md) + 2*var(--spacing-xl)); */

+ /* min-width: calc(var(--text-md)*var(--line-md) + 2*var(--spacing-xl)); */

}

[data-testid = "bot"] {

- max-width: 85%;

- width: auto !important;

+ max-width: 95%;

+ /* width: auto !important; */

border-bottom-left-radius: 0 !important;

}

[data-testid = "user"] {

- max-width: 85%;

- width: auto !important;

+ max-width: 100%;

+ /* width: auto !important; */

border-bottom-right-radius: 0 !important;

}

/* 行内代码 */

From 51b3f8adca83c93f1ecfcc2884521ea52c42cbc6 Mon Sep 17 00:00:00 2001

From: Your Name

Date: Sun, 2 Apr 2023 20:03:25 +0800

Subject: [PATCH 077/154] Add exception handling

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

main.py | 7 ++++---

predict.py | 10 ++++++----

toolbox.py | 23 +++++++++++++++++++++--

3 files changed, 31 insertions(+), 9 deletions(-)

diff --git a/main.py b/main.py

index 3de76a7d..0f0fe921 100644

--- a/main.py

+++ b/main.py

@@ -12,7 +12,8 @@ PORT = find_free_port() if WEB_PORT <= 0 else WEB_PORT

if not AUTHENTICATION: AUTHENTICATION = None

initial_prompt = "Serve me as a writing and programming assistant."

-title_html = """

Date: Sun, 2 Apr 2023 20:18:58 +0800

Subject: [PATCH 078/154] return None instead of [] when no file is concluded

---

toolbox.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/toolbox.py b/toolbox.py

index 61faf7c7..f7f73e90 100644

--- a/toolbox.py

+++ b/toolbox.py

@@ -303,7 +303,7 @@ def on_file_uploaded(files, chatbot, txt):

def on_report_generated(files, chatbot):

from toolbox import find_recent_files

report_files = find_recent_files('gpt_log')

- if len(report_files) == 0: return report_files, chatbot

+ if len(report_files) == 0: return files, chatbot

# files.extend(report_files)

chatbot.append(['汇总报告如何远程获取?', '汇总报告已经添加到右侧“文件上传区”(可能处于折叠状态),请查收。'])

return report_files, chatbot
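Patch 078 makes `on_report_generated` return the incoming `files` value instead of the empty `report_files` list when nothing new is found in `gpt_log`. Presumably this keeps Gradio from clearing the upload component. The following is a small sketch of that control flow. Passing `find_recent_files` in as a parameter is an assumption made so that the function can be exercised outside the repository.

```python
def on_report_generated(files, chatbot, find_recent_files):
    """Sketch of the patched callback; `find_recent_files` is injected for testing."""
    report_files = find_recent_files("gpt_log")
    if len(report_files) == 0:
        # Hand back the current upload list unchanged rather than an empty list.
        return files, chatbot
    chatbot.append(["How do I retrieve the summary report?",
                    "It has been added to the file-upload area on the right (it may be collapsed)."])
    return report_files, chatbot
```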

From 160b001befdb674813e661d07656c1074fcc23cc Mon Sep 17 00:00:00 2001

From: Your Name

Date: Sun, 2 Apr 2023 20:20:37 +0800

Subject: [PATCH 079/154] CHATBOT_HEIGHT - 1

---

config.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/config.py b/config.py

index f4e1bc8b..a3c634f1 100644

--- a/config.py

+++ b/config.py

@@ -22,7 +22,7 @@ else:

# [step 3]>> 以下配置可以优化体验,但大部分场合下并不需要修改

# 对话窗的高度

-CHATBOT_HEIGHT = 1116

+CHATBOT_HEIGHT = 1115

# 发送请求到OpenAI后,等待多久判定为超时

TIMEOUT_SECONDS = 25

From 9f91fca4d23b8c8b8045a71f17a6ba23a006231c Mon Sep 17 00:00:00 2001

From: Your Name

Date: Sun, 2 Apr 2023 20:22:11 +0800

Subject: [PATCH 080/154] remove verbose print

---

toolbox.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/toolbox.py b/toolbox.py

index f7f73e90..c55a48e5 100644

--- a/toolbox.py

+++ b/toolbox.py

@@ -176,7 +176,7 @@ def close_up_code_segment_during_stream(gpt_reply):

segments = gpt_reply.split('```')

n_mark = len(segments) - 1

if n_mark % 2 == 1:

- print('输出代码片段中!')

+ # print('输出代码片段中!')

return gpt_reply+'\n```'

else:

return gpt_reply
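Patch 080 only comments out a debug `print`, but the helper around it is worth spelling out. While a reply is still streaming, an odd number of Markdown fences means a code block is still open. The helper therefore appends a closing fence so the partial render stays well-formed. The sketch below restates that logic. Building the fence string (`FENCE`) from three single backticks only keeps this snippet safe to embed in Markdown. For non-overlapping matches, `str.count` gives the same number as `len(split(...)) - 1`.

```python
FENCE = "`" * 3  # a Markdown code fence


def close_up_code_segment_during_stream(gpt_reply):
    """Temporarily close an unterminated code fence in a partially streamed reply."""
    n_mark = gpt_reply.count(FENCE)  # equals len(gpt_reply.split(FENCE)) - 1
    if n_mark % 2 == 1:
        return gpt_reply + "\n" + FENCE
    return gpt_reply
```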

From 9a192fd4738ddeed46cc39a10d2a228d815351b6 Mon Sep 17 00:00:00 2001

From: Your Name

Date: Sun, 2 Apr 2023 20:35:09 +0800

Subject: [PATCH 081/154] #236

---

README.md | 4 +++-

theme.py | 19 +++++++++++++------

2 files changed, 16 insertions(+), 7 deletions(-)

diff --git a/README.md b/README.md

index 96a9cb8e..f670dc13 100644

--- a/README.md

+++ b/README.md

@@ -192,7 +192,7 @@ input区域 输入 ./crazy_functions/test_project/python/dqn , 然后点击 "[

如果你发明了更好用的学术快捷键,欢迎发issue或者pull requests!

## 配置代理

-

+### 方法一:常规方法

在```config.py```中修改端口与代理软件对应

From bb1e410cb4acb6bfb06545edd4eeab55b3350854 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 01:47:49 +0800

Subject: [PATCH 097/154] Update README.md

---

README.md | 1 +

1 file changed, 1 insertion(+)

diff --git a/README.md b/README.md

index 1deff679..8a7e911e 100644

--- a/README.md

+++ b/README.md

@@ -46,6 +46,7 @@ arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下

图片显示 | 可以在markdown中显示图片

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

+启动暗色gradio主题 | 在浏览器url后面添加```/?__dark-theme=true```可以切换dark主题

huggingface免科学上网[在线体验](https://huggingface.co/spaces/qingxu98/gpt-academic) | 登陆huggingface后复制[此空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

…… | ……

From 69be335d22ac3008a8d17be23434936b30ac2aa4 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 01:49:40 +0800

Subject: [PATCH 098/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 8a7e911e..7af0d0c9 100644

--- a/README.md

+++ b/README.md

@@ -46,7 +46,7 @@ arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下

图片显示 | 可以在markdown中显示图片

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

-启动暗色gradio主题 | 在浏览器url后面添加```/?__dark-theme=true```可以切换dark主题

+启动暗色gradio[主题](https://github.com/binary-husky/chatgpt_academic/issues/173) | 在浏览器url后面添加```/?__dark-theme=true```可以切换dark主题

huggingface免科学上网[在线体验](https://huggingface.co/spaces/qingxu98/gpt-academic) | 登陆huggingface后复制[此空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

…… | ……

From 69624c66d7efd02d1273397af9248348ba0d5593 Mon Sep 17 00:00:00 2001

From: qingxu fu <505030475@qq.com>

Date: Mon, 3 Apr 2023 09:32:01 +0800

Subject: [PATCH 099/154] update README

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 7af0d0c9..cf8dda34 100644

--- a/README.md

+++ b/README.md

@@ -115,7 +115,7 @@ python -m pip install -r requirements.txt

# (选择二.2)conda activate gptac_venv

# (选择二.3)python -m pip install -r requirements.txt

-# 备注:使用官方pip源或者阿里pip源,其他pip源(如清华pip)有可能出问题,临时换源方法:

+# 备注:使用官方pip源或者阿里pip源,其他pip源(如一些大学的pip)有可能出问题,临时换源方法:

# python -m pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

```

From 6b5bdbe98a882a726ec9710e5e94baa94d470ad6 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 17:00:51 +0800

Subject: [PATCH 100/154] Update issue templates

---

.github/ISSUE_TEMPLATE/bug_report.md | 14 ++++++++++++++

.github/ISSUE_TEMPLATE/feature_request.md | 10 ++++++++++

2 files changed, 24 insertions(+)

create mode 100644 .github/ISSUE_TEMPLATE/bug_report.md

create mode 100644 .github/ISSUE_TEMPLATE/feature_request.md

diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

new file mode 100644

index 00000000..9ab88316

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/bug_report.md

@@ -0,0 +1,14 @@

+---

+name: Bug report

+about: Create a report to help us improve

+title: ''

+labels: ''

+assignees: ''

+

+---

+

+**Describe the bug 简述**

+

+**Screen Shot 截图**

+

+**Terminal Traceback 终端traceback(如果有)**

diff --git a/.github/ISSUE_TEMPLATE/feature_request.md b/.github/ISSUE_TEMPLATE/feature_request.md

new file mode 100644

index 00000000..e46a4c01

--- /dev/null

+++ b/.github/ISSUE_TEMPLATE/feature_request.md

@@ -0,0 +1,10 @@

+---

+name: Feature request

+about: Suggest an idea for this project

+title: ''

+labels: ''

+assignees: ''

+

+---

+

+

From b5a48369a47de0d2c5eda433a95957e70fc516ff Mon Sep 17 00:00:00 2001

From: LiZheGuang <1030660726@qq.com>

Date: Mon, 3 Apr 2023 17:44:09 +0800

Subject: [PATCH 101/154] fix: 🐛 Fix the React project parser not appearing in the dropdown list

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

functional_crazy.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/functional_crazy.py b/functional_crazy.py

index 9c83b410..66a08db5 100644

--- a/functional_crazy.py

+++ b/functional_crazy.py

@@ -43,7 +43,7 @@ def get_crazy_functionals():

"AsButton": False, # 加入下拉菜单中

"Function": 解析一个Java项目

},

- "解析整个Java项目": {

+ "解析整个React项目": {

"Color": "stop", # 按钮颜色

"AsButton": False, # 加入下拉菜单中

"Function": 解析一个Rect项目
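Patch 101 only renames a dictionary key. The React entry had been registered under `"解析整個Java项目"`, presumably the same key as the Java entry above it. In a Python dict literal, a repeated key silently keeps only the last value, so only one of the two plugins reached the dropdown. The sketch below shows how plugins are split by the `AsButton` flag (default `True`) and dispatched from the dropdown, mirroring the `main.py` hunks in this series. `split_plugins` is a hypothetical helper. Unlike the patch, `route` here takes `crazy_fns` as an explicit argument instead of closing over it.

```python
PLACEHOLDERS = ("打开插件列表", "请先从插件列表中选择")  # labels shown before a plugin is picked


def split_plugins(crazy_fns):
    """Partition plugin names into fixed buttons and dropdown entries by 'AsButton'."""
    buttons = [k for k, v in crazy_fns.items() if v.get("AsButton", True)]
    dropdown = [k for k, v in crazy_fns.items() if not v.get("AsButton", True)]
    return buttons, dropdown


def route(crazy_fns, k, *args, **kwargs):
    """Dispatch the dropdown-selected plugin; placeholder labels yield nothing."""
    if k in PLACEHOLDERS:
        return
    yield from crazy_fns[k]["Function"](*args, **kwargs)
```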

From aaf44750d9a0f876220f2159a1ca9d428a1f2fbd Mon Sep 17 00:00:00 2001

From: qingxu fu <505030475@qq.com>

Date: Mon, 3 Apr 2023 20:56:00 +0800

Subject: [PATCH 102/154] Use the dark eye-friendly theme by default

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

main.py | 6 ++++--

1 file changed, 4 insertions(+), 2 deletions(-)

diff --git a/main.py b/main.py

index 0f0fe921..973d16fb 100644

--- a/main.py

+++ b/main.py

@@ -134,10 +134,12 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as de

# gradio的inbrowser触发不太稳定,回滚代码到原始的浏览器打开函数

def auto_opentab_delay():

import threading, webbrowser, time

- print(f"如果浏览器没有自动打开,请复制并转到以下URL: http://localhost:{PORT}")

+ print(f"如果浏览器没有自动打开,请复制并转到以下URL:")

+ print(f"\t(亮色主题): http://localhost:{PORT}")

+ print(f"\t(暗色主题): http://localhost:{PORT}/?__dark-theme=true")

def open():

time.sleep(2)

- webbrowser.open_new_tab(f"http://localhost:{PORT}")

+ webbrowser.open_new_tab(f"http://localhost:{PORT}/?__dark-theme=true")

threading.Thread(target=open, name="open-browser", daemon=True).start()

auto_opentab_delay()
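The launcher change above comes down to two URLs that differ only by the `__dark-theme=true` query parameter, plus a delayed browser open on a daemon thread. Below is a minimal sketch of the same pattern. It assumes an injectable `opener` (hypothetical; the patch calls `webbrowser.open_new_tab` directly) so the code can run without a real browser.

```python
import threading
import time
import webbrowser


def theme_urls(port: int) -> dict:
    """Light and dark entry URLs, using the query parameter from this patch."""
    base = f"http://localhost:{port}"
    return {"light": base, "dark": f"{base}/?__dark-theme=true"}


def open_browser_later(url: str, delay: float = 2.0,
                       opener=webbrowser.open_new_tab) -> threading.Thread:
    """Open `url` after `delay` seconds from a daemon thread so startup is not blocked."""
    def _open():
        time.sleep(delay)
        opener(url)

    t = threading.Thread(target=_open, name="open-browser", daemon=True)
    t.start()
    return t
```

Because the thread is a daemon, a pending open does not keep the interpreter alive if the server exits during the delay.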

From a4137e7170a5c4643c3a34c7299f5a6cfb449126 Mon Sep 17 00:00:00 2001

From: qingxu fu <505030475@qq.com>

Date: Tue, 4 Apr 2023 15:23:42 +0800

Subject: [PATCH 103/154] Fix bugs in the rewrite-code-in-English plugin

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

.gitignore | 3 +-

crazy_functions/代码重写为全英文_多线程.py | 135 +++++++++++++++++----

predict.py | 8 +-

requirements.txt | 2 +

4 files changed, 124 insertions(+), 24 deletions(-)

diff --git a/.gitignore b/.gitignore

index 36f35343..d2c0f963 100644

--- a/.gitignore

+++ b/.gitignore

@@ -139,4 +139,5 @@ config_private.py

gpt_log

private.md

private_upload

-other_llms

\ No newline at end of file

+other_llms

+cradle.py

\ No newline at end of file

diff --git a/crazy_functions/代码重写为全英文_多线程.py b/crazy_functions/代码重写为全英文_多线程.py

index 6c6b1c71..bfcbec3b 100644

--- a/crazy_functions/代码重写为全英文_多线程.py

+++ b/crazy_functions/代码重写为全英文_多线程.py

@@ -1,41 +1,126 @@

import threading

from predict import predict_no_ui_long_connection

-from toolbox import CatchException, write_results_to_file

+from toolbox import CatchException, write_results_to_file, report_execption

+def extract_code_block_carefully(txt):

+ splitted = txt.split('```')

+ n_code_block_seg = len(splitted) - 1

+ if n_code_block_seg <= 1: return txt

+ # 剩下的情况都开头除去 ``` 结尾除去一次 ```

+ txt_out = '```'.join(splitted[1:-1])

+ return txt_out
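As written, `extract_code_block_carefully` keeps everything between the first and the last triple-backtick marker. That span includes the fence's info string, so a reply that opens with a fence tagged `python` comes back with `python` as its first line, and that line is then written into the generated file. The sketch below drops that first line as well. It assumes the reply wraps the code in one outer fence pair, and the name `extract_code_block` is hypothetical.

```python
def extract_code_block(txt: str) -> str:
    """Return the body between the first and the last ``` marker.

    With fewer than two markers the text is returned unchanged. Unlike the
    patch's version, the info string on the opening fence line (e.g. "python")
    is removed together with that line.
    """
    parts = txt.split("```")
    if len(parts) - 1 <= 1:
        return txt
    body = "```".join(parts[1:-1])
    # Everything up to the first newline belongs to the opening fence line.
    first_newline = body.find("\n")
    if first_newline == -1:
        return body
    return body[first_newline + 1:]
```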

+

+def breakdown_txt_to_satisfy_token_limit(txt, limit, must_break_at_empty_line=True):

+ from transformers import GPT2TokenizerFast

+ tokenizer = GPT2TokenizerFast.from_pretrained("gpt2")

+ get_token_cnt = lambda txt: len(tokenizer(txt)["input_ids"])

+ def cut(txt_tocut, must_break_at_empty_line): # 递归

+ if get_token_cnt(txt_tocut) <= limit:

+ return [txt_tocut]

+ else:

+ lines = txt_tocut.split('\n')

+ estimated_line_cut = limit / get_token_cnt(txt_tocut) * len(lines)

+ estimated_line_cut = int(estimated_line_cut)

+ for cnt in reversed(range(estimated_line_cut)):

+ if must_break_at_empty_line:

+ if lines[cnt] != "": continue

+ print(cnt)

+ prev = "\n".join(lines[:cnt])

+ post = "\n".join(lines[cnt:])

+ if get_token_cnt(prev) < limit: break

+ if cnt == 0:

+ print('what the f?')

+ raise RuntimeError("存在一行极长的文本!")

+ print(len(post))

+ # 列表递归接龙

+ result = [prev]

+ result.extend(cut(post, must_break_at_empty_line))

+ return result

+ try:

+ return cut(txt, must_break_at_empty_line=True)

+ except RuntimeError:

+ return cut(txt, must_break_at_empty_line=False)
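`breakdown_txt_to_satisfy_token_limit` cuts at the last line break (preferably an empty line) whose prefix still fits within the limit, then recurses on the remainder. When no empty line works, it retries without that constraint. The sketch below follows the same scheme with two assumptions:

- The token counter is pluggable. A whitespace word count stands in for `GPT2TokenizerFast` so the example runs offline.
- The scan starts from the last line instead of from the proportional estimate the original uses to save tokenizer calls.

Every cut index is 1 or larger, so each piece holds at least one line and the recursion always shrinks its input. A single over-long line raises `RuntimeError`, much like the original's 存在一行极长的文本 ("an extremely long line exists") error.

```python
from typing import Callable, List


def split_by_token_limit(
    txt: str,
    limit: int,
    count_tokens: Callable[[str], int] = lambda s: len(s.split()),
    must_break_at_empty_line: bool = True,
) -> List[str]:
    """Split `txt` at line breaks into pieces of at most `limit` tokens each."""

    def cut(chunk: str, at_empty_line: bool) -> List[str]:
        if count_tokens(chunk) <= limit:
            return [chunk]
        lines = chunk.split("\n")
        # Try cut points from the end so each piece is as large as possible.
        for cnt in range(len(lines) - 1, 0, -1):
            if at_empty_line and lines[cnt] != "":
                continue
            prev = "\n".join(lines[:cnt])
            if count_tokens(prev) <= limit:
                post = "\n".join(lines[cnt:])
                return [prev] + cut(post, at_empty_line)
        raise RuntimeError("no line break keeps the piece under the token limit")

    if must_break_at_empty_line:
        try:
            return cut(txt, True)
        except RuntimeError:
            pass  # fall back to cutting at any line break
    return cut(txt, False)
```

Because every cut happens at a line break, `"\n".join(pieces)` reproduces the input exactly.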

+

+

+def break_txt_into_half_at_some_linebreak(txt):

+ lines = txt.split('\n')

+ n_lines = len(lines)

+ pre = lines[:(n_lines//2)]

+ post = lines[(n_lines//2):]

+ return "\n".join(pre), "\n".join(post)

@CatchException

def 全项目切换英文(txt, top_p, temperature, chatbot, history, sys_prompt, WEB_PORT):

- history = [] # 清空历史,以免输入溢出

- # 集合文件

- import time, glob, os

+ # 第1步:清空历史,以免输入溢出

+ history = []

+

+ # 第2步:尝试导入依赖,如果缺少依赖,则给出安装建议

+ try:

+ import openai, transformers

+ except:

+ report_execption(chatbot, history,

+ a = f"解析项目: {txt}",

+ b = f"导入软件依赖失败。使用该模块需要额外依赖,安装方法```pip install --upgrade openai transformers```。")

+ yield chatbot, history, '正常'

+ return

+

+ # 第3步:集合文件

+ import time, glob, os, shutil, re, openai

os.makedirs('gpt_log/generated_english_version', exist_ok=True)

os.makedirs('gpt_log/generated_english_version/crazy_functions', exist_ok=True)

file_manifest = [f for f in glob.glob('./*.py') if ('test_project' not in f) and ('gpt_log' not in f)] + \

[f for f in glob.glob('./crazy_functions/*.py') if ('test_project' not in f) and ('gpt_log' not in f)]

+ # file_manifest = ['./toolbox.py']

i_say_show_user_buffer = []

- # 随便显示点什么防止卡顿的感觉

+ # 第4步:随便显示点什么防止卡顿的感觉

for index, fp in enumerate(file_manifest):

# if 'test_project' in fp: continue

with open(fp, 'r', encoding='utf-8') as f:

file_content = f.read()

- i_say_show_user =f'[{index}/{len(file_manifest)}] 接下来请将以下代码中包含的所有中文转化为英文,只输出代码: {os.path.abspath(fp)}'

+ i_say_show_user =f'[{index}/{len(file_manifest)}] 接下来请将以下代码中包含的所有中文转化为英文,只输出转化后的英文代码,请用代码块输出代码: {os.path.abspath(fp)}'

i_say_show_user_buffer.append(i_say_show_user)

chatbot.append((i_say_show_user, "[Local Message] 等待多线程操作,中间过程不予显示."))

yield chatbot, history, '正常'

- # 任务函数

+

+ # 第5步:Token限制下的截断与处理

+ MAX_TOKEN = 2500

+ # from transformers import GPT2TokenizerFast

+ # print('加载tokenizer中')

+ # tokenizer = GPT2TokenizerFast.from_pretrained("gpt2")

+ # get_token_cnt = lambda txt: len(tokenizer(txt)["input_ids"])

+ # print('加载tokenizer结束')

+

+

+ # 第6步:任务函数

mutable_return = [None for _ in file_manifest]

+ observe_window = [[""] for _ in file_manifest]

def thread_worker(fp,index):

+ if index > 10:

+ time.sleep(60)

+ print('Openai 限制免费用户每分钟20次请求,降低请求频率中。')

with open(fp, 'r', encoding='utf-8') as f:

file_content = f.read()

- i_say = f'接下来请将以下代码中包含的所有中文转化为英文,只输出代码,文件名是{fp},文件代码是 ```{file_content}```'

- # ** gpt request **

- gpt_say = predict_no_ui_long_connection(inputs=i_say, top_p=top_p, temperature=temperature, history=history, sys_prompt=sys_prompt)

- mutable_return[index] = gpt_say

+ i_say_template = lambda fp, file_content: f'接下来请将以下代码中包含的所有中文转化为英文,只输出代码,文件名是{fp},文件代码是 ```{file_content}```'

+ try:

+ gpt_say = ""

+ # 分解代码文件

+ file_content_breakdown = breakdown_txt_to_satisfy_token_limit(file_content, MAX_TOKEN)

+ for file_content_partial in file_content_breakdown:

+ i_say = i_say_template(fp, file_content_partial)

+ # # ** gpt request **

+ gpt_say_partial = predict_no_ui_long_connection(inputs=i_say, top_p=top_p, temperature=temperature, history=[], sys_prompt=sys_prompt, observe_window=observe_window[index])

+ gpt_say_partial = extract_code_block_carefully(gpt_say_partial)

+ gpt_say += gpt_say_partial

+ mutable_return[index] = gpt_say

+ except ConnectionAbortedError as token_exceed_err:

+ print('至少一个线程任务Token溢出而失败', token_exceed_err)

+ except Exception as e:

+ print('至少一个线程任务意外失败', e)
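Each worker handles one file as follows:

1. It sends the file's pieces in turn.
2. It strips the code fence from every reply.
3. It stores the concatenated result in its own slot of `mutable_return`.

Any exception leaves the slot at `None`. The later write-out step then copies the original file instead, using `shutil.copyfile`. Below is a stripped-down sketch of that contract, where `request` is a hypothetical callable standing in for the model call plus fence extraction. The original distinguishes `ConnectionAbortedError` (token overflow) from other errors only in its log message, so the sketch uses a single handler.

```python
from typing import Callable, List, Optional


def worker(index: int, parts: List[str], request: Callable[[str], str],
           results: List[Optional[str]]) -> None:
    """Send each part in turn and store the concatenated reply in results[index].

    On any error the slot is left as None, signalling failure to the caller.
    """
    try:
        reply = ""
        for part in parts:
            reply += request(part)
        results[index] = reply  # written only after every part succeeded
    except Exception as exc:
        print(f"task {index} failed: {exc}")


def text_to_write(original: str, result: Optional[str]) -> str:
    """Keep the original file content when the task failed, as step 9 does."""
    return original if result is None else result
```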

- # 所有线程同时开始执行任务函数

+ # 第7步:所有线程同时开始执行任务函数

handles = [threading.Thread(target=thread_worker, args=(fp,index)) for index, fp in enumerate(file_manifest)]

for h in handles:

h.daemon = True
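Each thread also receives a one-element list, `observe_window[index]`, into which `predict_no_ui_long_connection` appends streamed text. The UI loop works like this:

- It polls every 0.2 s.
- It stops once no thread is alive.
- It shows the last 60 characters of every unfinished reply.

Because each cell has exactly one writer, the reader at worst sees a slightly stale prefix. Below is a self-contained sketch of the pattern. It uses a hypothetical `fake_stream` in place of the network call, with shorter intervals so it finishes quickly.

```python
import threading
import time
from typing import List


def fake_stream(chunks: List[str], observe_window: List[str], delay: float = 0.01) -> str:
    """Hypothetical stand-in for a streaming request: every chunk is appended
    to observe_window[0] as it arrives, mirroring the hook added to predict.py."""
    result = ""
    for chunk in chunks:
        time.sleep(delay)
        result += chunk
        observe_window[0] += chunk
    return result


def run_jobs(jobs: List[List[str]], poll_interval: float = 0.02):
    windows = [[""] for _ in jobs]  # one single-writer cell per thread
    results = [None] * len(jobs)

    def task(i: int) -> None:
        results[i] = fake_stream(jobs[i], windows[i])

    handles = [threading.Thread(target=task, args=(i,), daemon=True) for i in range(len(jobs))]
    for h in handles:
        h.start()

    snapshots = []
    while True:
        time.sleep(poll_interval)
        alive = [h.is_alive() for h in handles]
        if not any(alive):
            break
        # Show only the tail of each partial reply, like the [-60:] slice in the patch.
        snapshots.append([f"running: ...{w[0][-60:]}" if a else "done"
                          for a, w in zip(alive, windows)])
    for h in handles:
        h.join()
    return results, snapshots
```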

@@ -43,19 +128,23 @@ def 全项目切换英文(txt, top_p, temperature, chatbot, history, sys_prompt,

chatbot.append(('开始了吗?', f'多线程操作已经开始'))

yield chatbot, history, '正常'

- # 循环轮询各个线程是否执行完毕

+ # 第8步:循环轮询各个线程是否执行完毕

cnt = 0

while True:

- time.sleep(1)

+ cnt += 1

+ time.sleep(0.2)

th_alive = [h.is_alive() for h in handles]

if not any(th_alive): break

- stat = ['执行中' if alive else '已完成' for alive in th_alive]

- stat_str = '|'.join(stat)

- cnt += 1

- chatbot[-1] = (chatbot[-1][0], f'多线程操作已经开始,完成情况: {stat_str}' + ''.join(['.']*(cnt%4)))

+ # 更好的UI视觉效果

+ observe_win = []

+ for thread_index, alive in enumerate(th_alive):

+ observe_win.append("[ ..."+observe_window[thread_index][0][-60:].replace('\n','').replace('```','...').replace(' ','.').replace('ChatGPT 学术优化

""" +title_html = "ChatGPT 学术优化

" +description = """代码开源和更新[地址🚀](https://github.com/binary-husky/chatgpt_academic),感谢热情的[开发者们❤️](https://github.com/binary-husky/chatgpt_academic/graphs/contributors)""" # 问询记录, python 版本建议3.9+(越新越好) import logging @@ -78,12 +79,12 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as de with gr.Row(): with gr.Accordion("点击展开“文件上传区”。上传本地文件可供红色函数插件调用。", open=False) as area_file_up: file_upload = gr.Files(label="任何文件, 但推荐上传压缩文件(zip, tar)", file_count="multiple") - with gr.Accordion("展开SysPrompt & GPT参数 & 交互界面布局", open=False): + with gr.Accordion("展开SysPrompt & 交互界面布局 & Github地址", open=False): system_prompt = gr.Textbox(show_label=True, placeholder=f"System Prompt", label="System prompt", value=initial_prompt) top_p = gr.Slider(minimum=-0, maximum=1.0, value=1.0, step=0.01,interactive=True, label="Top-p (nucleus sampling)",) temperature = gr.Slider(minimum=-0, maximum=2.0, value=1.0, step=0.01, interactive=True, label="Temperature",) checkboxes = gr.CheckboxGroup(["基础功能区", "函数插件区"], value=["基础功能区", "函数插件区"], label="显示/隐藏功能区") - + gr.Markdown(description) # 功能区显示开关与功能区的互动 def fn_area_visibility(a): ret = {} diff --git a/predict.py b/predict.py index 31a58613..f4c87cc1 100644 --- a/predict.py +++ b/predict.py @@ -186,14 +186,16 @@ def predict(inputs, top_p, temperature, chatbot=[], history=[], system_prompt='' error_msg = chunk.decode() if "reduce the length" in error_msg: chatbot[-1] = (chatbot[-1][0], "[Local Message] Input (or history) is too long, please reduce input or clear history by refreshing this page.") - history = [] + history = [] # 清除历史 elif "Incorrect API key" in error_msg: chatbot[-1] = (chatbot[-1][0], "[Local Message] Incorrect API key provided.") + elif "exceeded your current quota" in error_msg: + chatbot[-1] = (chatbot[-1][0], "[Local Message] You exceeded your current quota. 
OpenAI以账户额度不足为由,拒绝服务.") else: from toolbox import regular_txt_to_markdown - tb_str = regular_txt_to_markdown(traceback.format_exc()) - chatbot[-1] = (chatbot[-1][0], f"[Local Message] Json Error \n\n {tb_str} \n\n {regular_txt_to_markdown(chunk.decode()[4:])}") - yield chatbot, history, "Json解析不合常规" + error_msg + tb_str = '```\n' + traceback.format_exc() + '```' + chatbot[-1] = (chatbot[-1][0], f"[Local Message] 异常 \n\n{tb_str} \n\n{regular_txt_to_markdown(chunk.decode()[4:])}") + yield chatbot, history, "Json异常" + error_msg return def generate_payload(inputs, top_p, temperature, history, system_prompt, stream): diff --git a/toolbox.py b/toolbox.py index 7733d837..61faf7c7 100644 --- a/toolbox.py +++ b/toolbox.py @@ -115,8 +115,9 @@ def CatchException(f): from check_proxy import check_proxy from toolbox import get_conf proxies, = get_conf('proxies') - tb_str = regular_txt_to_markdown(traceback.format_exc()) - chatbot[-1] = (chatbot[-1][0], f"[Local Message] 实验性函数调用出错: \n\n {tb_str} \n\n 当前代理可用性: \n\n {check_proxy(proxies)}") + tb_str = '```\n' + traceback.format_exc() + '```' + if len(chatbot) == 0: chatbot.append(["插件调度异常","异常原因"]) + chatbot[-1] = (chatbot[-1][0], f"[Local Message] 实验性函数调用出错: \n\n{tb_str} \n\n当前代理可用性: \n\n{check_proxy(proxies)}") yield chatbot, history, f'异常 {e}' return decorated @@ -164,6 +165,23 @@ def markdown_convertion(txt): else: return pre + markdown.markdown(txt,extensions=['fenced_code','tables']) + suf +def close_up_code_segment_during_stream(gpt_reply): + """ + 在gpt输出代码的中途(输出了前面的```,但还没输出完后面的```),补上后面的``` + """ + if '```' not in gpt_reply: return gpt_reply + if gpt_reply.endswith('```'): return gpt_reply + + # 排除了以上两个情况,我们 + segments = gpt_reply.split('```') + n_mark = len(segments) - 1 + if n_mark % 2 == 1: + print('输出代码片段中!') + return gpt_reply+'\n```' + else: + return gpt_reply + + def format_io(self, y): """ @@ -172,6 +190,7 @@ def format_io(self, y): if y is None or y == []: return [] i_ask, gpt_reply = y[-1] i_ask = 
text_divide_paragraph(i_ask) # 输入部分太自由,预处理一波 + gpt_reply = close_up_code_segment_during_stream(gpt_reply) # 当代码输出半截的时候,试着补上后个``` y[-1] = ( None if i_ask is None else markdown.markdown(i_ask, extensions=['fenced_code','tables']), None if gpt_reply is None else markdown_convertion(gpt_reply) From 1d912bc10de08b249abfc00db328a33f7edfdd6b Mon Sep 17 00:00:00 2001 From: Your Name

@@ -204,6 +204,8 @@ input区域 输入 ./crazy_functions/test_project/python/dqn , 然后点击 "[

```

python check_proxy.py

```

+### 方法二:纯新手教程

+[纯新手教程](https://github.com/binary-husky/chatgpt_academic/wiki/%E4%BB%A3%E7%90%86%E8%BD%AF%E4%BB%B6%E9%97%AE%E9%A2%98%E7%9A%84%E6%96%B0%E6%89%8B%E8%A7%A3%E5%86%B3%E6%96%B9%E6%B3%95%EF%BC%88%E6%96%B9%E6%B3%95%E5%8F%AA%E9%80%82%E7%94%A8%E4%BA%8E%E6%96%B0%E6%89%8B%EF%BC%89)

## 兼容性测试

diff --git a/theme.py b/theme.py

index 06d377de..1a186aac 100644

--- a/theme.py

+++ b/theme.py

@@ -82,29 +82,35 @@ def adjust_theme():

return set_theme

advanced_css = """

+/* 设置表格的外边距为1em,内部单元格之间边框合并,空单元格显示. */

.markdown-body table {

margin: 1em 0;

border-collapse: collapse;

empty-cells: show;

}

+

+/* 设置表格单元格的内边距为5px,边框粗细为1.2px,颜色为--border-color-primary. */

.markdown-body th, .markdown-body td {

border: 1.2px solid var(--border-color-primary);

padding: 5px;

}

+

+/* 设置表头背景颜色为rgba(175,184,193,0.2),透明度为0.2. */

.markdown-body thead {

background-color: rgba(175,184,193,0.2);

}

+

+/* 设置表头单元格的内边距为0.5em和0.2em. */

.markdown-body thead th {

padding: .5em .2em;

}

-/* 以下 CSS 来自对 https://github.com/GaiZhenbiao/ChuanhuChatGPT 的移植。*/

-

-/* list */

+/* 去掉列表前缀的默认间距,使其与文本线对齐. */

.markdown-body ol, .markdown-body ul {

padding-inline-start: 2em !important;

}

-/* 对话气泡 */

+

+/* 设定聊天气泡的样式,包括圆角、最大宽度和阴影等. */

[class *= "message"] {

border-radius: var(--radius-xl) !important;

/* padding: var(--spacing-xl) !important; */

@@ -123,7 +129,8 @@ advanced_css = """

/* width: auto !important; */

border-bottom-right-radius: 0 !important;

}

-/* 行内代码 */

+

+/* 行内代码的背景设为淡灰色,设定圆角和间距. */

.markdown-body code {

display: inline;

white-space: break-spaces;

@@ -132,7 +139,7 @@ advanced_css = """

padding: .2em .4em .1em .4em;

background-color: rgba(175,184,193,0.2);

}

-/* 代码块 */

+/* 设定代码块的样式,包括背景颜色、内、外边距、圆角。 */

.markdown-body pre code {

display: block;

overflow: auto;
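The `close_up_code_segment_during_stream` helper appears in the flattened toolbox.py patch above. Whenever a streamed reply contains an odd number of triple-backtick markers, it appends a closing fence, so a half-finished code block still renders as code in the chat window. Below is an equivalent sketch under the hypothetical name `close_open_fence`. It uses `str.count`, which counts the same non-overlapping markers as `len(txt.split('```')) - 1`.

```python
def close_open_fence(reply: str) -> str:
    """Append a closing ``` when a streamed reply stops inside a code block."""
    if "```" not in reply or reply.endswith("```"):
        return reply
    # An odd number of markers means the last fence is still open.
    if reply.count("```") % 2 == 1:
        return reply + "\n```"
    return reply
```

Like the original, this returns a reply that ends exactly on a fence marker unchanged, even when that marker opens a block.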

From 10cf456aa81fc1dc0f4718ed8b7a08ce9b1e3d97 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:27:19 +0800

Subject: [PATCH 082/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index f670dc13..b9721c16 100644

--- a/README.md

+++ b/README.md

@@ -22,7 +22,7 @@ https://github.com/polarwinkel/mdtex2html

>

> 1.请注意只有“红颜色”标识的函数插件(按钮)才支持读取文件。目前暂不能完善地支持pdf/word格式文献的翻译解读,相关函数函件正在测试中。

>

-> 2.本项目中每个文件的功能都在自译解[`project_self_analysis.md`](https://github.com/binary-husky/chatgpt_academic/wiki/chatgpt-academic%E9%A1%B9%E7%9B%AE%E8%87%AA%E8%AF%91%E8%A7%A3%E6%8A%A5%E5%91%8A)详细说明。随着版本的迭代,您也可以随时自行点击相关函数插件,调用GPT重新生成项目的自我解析报告。常见问题汇总在[`wiki`](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98)当中。

+> 2.本项目中每个文件的功能都在自译解[`self_analysis.md`](https://github.com/binary-husky/chatgpt_academic/wiki/chatgpt-academic%E9%A1%B9%E7%9B%AE%E8%87%AA%E8%AF%91%E8%A7%A3%E6%8A%A5%E5%91%8A)详细说明。随着版本的迭代,您也可以随时自行点击相关函数插件,调用GPT重新生成项目的自我解析报告。常见问题汇总在[`wiki`](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98)当中。

>

> 3.如果您不太习惯部分中文命名的函数,您可以随时点击相关函数插件,调用GPT一键生成纯英文的项目源代码。

From b188c4a2b5cd5a8de84176f07137d73ac5163e46 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:28:59 +0800

Subject: [PATCH 083/154] Update README.md

---

README.md | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/README.md b/README.md

index b9721c16..86bebb83 100644

--- a/README.md

+++ b/README.md

@@ -2,9 +2,9 @@

# ChatGPT 学术优化

-**如果喜欢这个项目,请给它一个Star;如果你发明了更好用的学术快捷键,欢迎发issue或者pull requests(dev分支)**

+**如果喜欢这个项目,请给它一个Star;如果你发明了更好用的快捷键或函数插件,欢迎发issue或者pull requests(dev分支)**

-If you like this project, please give it a Star. If you've come up with more useful academic shortcuts, feel free to open an issue or pull request (to `dev` branch).

+If you like this project, please give it a Star. If you've come up with more useful academic shortcuts or functional plugins, feel free to open an issue or pull request (to `dev` branch).

```

代码中参考了很多其他优秀项目中的设计,主要包括:

From 8d086ce7c0469af7749e3bbbc81827015b3e5ac0 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:31:44 +0800

Subject: [PATCH 084/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 86bebb83..8424cecd 100644

--- a/README.md

+++ b/README.md

@@ -20,7 +20,7 @@ https://github.com/polarwinkel/mdtex2html

> **Note**

>

-> 1.请注意只有“红颜色”标识的函数插件(按钮)才支持读取文件。目前暂不能完善地支持pdf/word格式文献的翻译解读,相关函数函件正在测试中。

+> 1.请注意只有“红颜色”标识的函数插件(按钮)才支持读取文件。目前对pdf/word格式文件的支持插件正在逐步完善中,需要更多developer的帮助。

>

> 2.本项目中每个文件的功能都在自译解[`self_analysis.md`](https://github.com/binary-husky/chatgpt_academic/wiki/chatgpt-academic%E9%A1%B9%E7%9B%AE%E8%87%AA%E8%AF%91%E8%A7%A3%E6%8A%A5%E5%91%8A)详细说明。随着版本的迭代,您也可以随时自行点击相关函数插件,调用GPT重新生成项目的自我解析报告。常见问题汇总在[`wiki`](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98)当中。

>

From 5f7a1a3da390d807280be6b06a689ce85ee59772 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:33:09 +0800

Subject: [PATCH 085/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 8424cecd..8b601314 100644

--- a/README.md

+++ b/README.md

@@ -24,7 +24,7 @@ https://github.com/polarwinkel/mdtex2html

>

> 2.本项目中每个文件的功能都在自译解[`self_analysis.md`](https://github.com/binary-husky/chatgpt_academic/wiki/chatgpt-academic%E9%A1%B9%E7%9B%AE%E8%87%AA%E8%AF%91%E8%A7%A3%E6%8A%A5%E5%91%8A)详细说明。随着版本的迭代,您也可以随时自行点击相关函数插件,调用GPT重新生成项目的自我解析报告。常见问题汇总在[`wiki`](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%B8%B8%E8%A7%81%E9%97%AE%E9%A2%98)当中。

>

-> 3.如果您不太习惯部分中文命名的函数,您可以随时点击相关函数插件,调用GPT一键生成纯英文的项目源代码。

+> 3.如果您不太习惯部分中文命名的函数、注释或者界面,您可以随时点击相关函数插件,调用ChatGPT一键生成纯英文的项目源代码。

From 9ccc53fa9657da9b498d171b6bbb8a8465c638e1 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 01:39:17 +0800

Subject: [PATCH 096/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 84255244..1deff679 100644

--- a/README.md

+++ b/README.md

@@ -46,7 +46,7 @@ arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下

图片显示 | 可以在markdown中显示图片

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

-huggingface免科学上网在线体验 | 登陆huggingface后复制[此空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

+huggingface免科学上网[在线体验](https://huggingface.co/spaces/qingxu98/gpt-academic) | 登陆huggingface后复制[此空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

…… | ……

From 3486fb5c102f4f7a29f196e3b2c23666554bafbb Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:39:28 +0800

Subject: [PATCH 086/154] Update README.md

---

README.md | 4 +++-

1 file changed, 3 insertions(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 8b601314..28d4d3cf 100644

--- a/README.md

+++ b/README.md

@@ -37,12 +37,14 @@ https://github.com/polarwinkel/mdtex2html

配置代理服务器 | 支持配置代理服务器

模块化设计 | 支持自定义高阶的实验性功能

自我程序剖析 | [实验性功能] 一键读懂本项目的源代码

-程序剖析 | [实验性功能] 一键可以剖析其他Python/C++项目

+程序剖析 | [实验性功能] 一键可以剖析其他Python/C/C++/Java项目树

读论文 | [实验性功能] 一键解读latex论文全文并生成摘要

批量注释生成 | [实验性功能] 一键批量生成函数注释

chat分析报告生成 | [实验性功能] 运行后自动生成总结汇报

公式显示 | 可以同时显示公式的tex形式和渲染形式

+arxiv小助手 | 输入arxiv文章url即可一键翻译摘要+下载PDF

图片显示 | 可以在markdown中显示图片

+多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

…… | ……

From 6105b7f73b84a043bbf242c172af64db71202bcf Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:41:36 +0800

Subject: [PATCH 087/154] Update README.md

---

README.md | 12 ++++++------

1 file changed, 6 insertions(+), 6 deletions(-)

diff --git a/README.md b/README.md

index 28d4d3cf..0bfd7104 100644

--- a/README.md

+++ b/README.md

@@ -36,13 +36,13 @@ https://github.com/polarwinkel/mdtex2html

自定义快捷键 | 支持自定义快捷键

配置代理服务器 | 支持配置代理服务器

模块化设计 | 支持自定义高阶的实验性功能

-自我程序剖析 | [实验性功能] 一键读懂本项目的源代码

-程序剖析 | [实验性功能] 一键可以剖析其他Python/C/C++/Java项目树

-读论文 | [实验性功能] 一键解读latex论文全文并生成摘要

-批量注释生成 | [实验性功能] 一键批量生成函数注释

-chat分析报告生成 | [实验性功能] 运行后自动生成总结汇报

+自我程序剖析 | [函数插件] 一键读懂本项目的源代码

+程序剖析 | [函数插件] 一键可以剖析其他Python/C/C++/Java项目树

+读论文 | [函数插件] 一键解读latex论文全文并生成摘要

+批量注释生成 | [函数插件] 一键批量生成函数注释

+chat分析报告生成 | [函数插件] 运行后自动生成总结汇报

+arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下载PDF

公式显示 | 可以同时显示公式的tex形式和渲染形式

-arxiv小助手 | 输入arxiv文章url即可一键翻译摘要+下载PDF

图片显示 | 可以在markdown中显示图片

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

From a562849e4c6f7c1bd957ede31869d18b5c5a731d Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:44:44 +0800

Subject: [PATCH 088/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 0bfd7104..8d3d7855 100644

--- a/README.md

+++ b/README.md

@@ -35,7 +35,7 @@ https://github.com/polarwinkel/mdtex2html

一键代码解释 | 可以正确显示代码、解释代码

自定义快捷键 | 支持自定义快捷键

配置代理服务器 | 支持配置代理服务器

-模块化设计 | 支持自定义高阶的实验性功能

+模块化设计 | 支持自定义高阶的实验性功能与[函数插件],插件支持[热更新](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6PR%E7%9A%84%E5%B0%8F%E5%B0%8F%E5%BB%BA%E8%AE%AE)

自我程序剖析 | [函数插件] 一键读懂本项目的源代码

程序剖析 | [函数插件] 一键可以剖析其他Python/C/C++/Java项目树

读论文 | [函数插件] 一键解读latex论文全文并生成摘要

From f4905a60e22e948cdd0a35ffdc011844266bafa3 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 21:51:41 +0800

Subject: [PATCH 089/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 8d3d7855..82d908ac 100644

--- a/README.md

+++ b/README.md

@@ -35,7 +35,7 @@ https://github.com/polarwinkel/mdtex2html

一键代码解释 | 可以正确显示代码、解释代码

自定义快捷键 | 支持自定义快捷键

配置代理服务器 | 支持配置代理服务器

-模块化设计 | 支持自定义高阶的实验性功能与[函数插件],插件支持[热更新](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6PR%E7%9A%84%E5%B0%8F%E5%B0%8F%E5%BB%BA%E8%AE%AE)

+模块化设计 | 支持自定义高阶的实验性功能与[函数插件],插件支持[热更新](https://github.com/binary-husky/chatgpt_academic/wiki/%E5%87%BD%E6%95%B0%E6%8F%92%E4%BB%B6%E6%8C%87%E5%8D%97)

自我程序剖析 | [函数插件] 一键读懂本项目的源代码

程序剖析 | [函数插件] 一键可以剖析其他Python/C/C++/Java项目树

读论文 | [函数插件] 一键解读latex论文全文并生成摘要

From a74f0a934370b5621779217da89a4260ca6f39d6 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 22:02:41 +0800

Subject: [PATCH 090/154] Update config.py

---

config.py | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/config.py b/config.py

index a3c634f1..d986750a 100644

--- a/config.py

+++ b/config.py

@@ -42,5 +42,5 @@ API_URL = "https://api.openai.com/v1/chat/completions"

# 设置并行使用的线程数

CONCURRENT_COUNT = 100

-# 设置用户名和密码

+# 设置用户名和密码(相关功能不稳定,与gradio版本和网络都相关,如果本地使用不建议加这个)

AUTHENTICATION = [] # [("username", "password"), ("username2", "password2"), ...]
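Gradio's `launch()` accepts an `auth` argument given as a list of `(username, password)` tuples. The commented example suggests `AUTHENTICATION` is meant to be forwarded there, with an empty list meaning no login page. How main.py actually forwards it is outside this excerpt, so the helper below (`auth_argument`) is hypothetical. It only checks the config shape and maps an empty list to `None`.

```python
from typing import List, Optional, Tuple


def auth_argument(authentication: List[Tuple[str, str]]) -> Optional[List[Tuple[str, str]]]:
    """Validate AUTHENTICATION; return None (no login) for an empty list."""
    if not authentication:
        return None
    for entry in authentication:
        ok = (isinstance(entry, tuple) and len(entry) == 2
              and all(isinstance(x, str) for x in entry))
        if not ok:
            raise ValueError(f"expected (username, password) tuples, got {entry!r}")
    return list(authentication)
```

It could then be used as `demo.launch(auth=auth_argument(AUTHENTICATION))`, assuming `demo` is the `gr.Blocks` instance.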

From c59eb8ff9e2e701582d018b667090f5f2d1fa72e Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 22:04:33 +0800

Subject: [PATCH 091/154] Update README.md

---

request_llm/README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/request_llm/README.md b/request_llm/README.md

index a539f1f2..c66cc15c 100644

--- a/request_llm/README.md

+++ b/request_llm/README.md

@@ -1,4 +1,4 @@

-# 如何使用其他大语言模型

+# 如何使用其他大语言模型(dev分支测试中)

## 1. 先运行text-generation

``` sh

From fff7b8ef91ebf42af6da32f71962691808d5ea29 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Sun, 2 Apr 2023 22:15:12 +0800

Subject: [PATCH 092/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index 82d908ac..21435b23 100644

--- a/README.md

+++ b/README.md

@@ -259,5 +259,5 @@ python check_proxy.py

- (Top Priority) 调用另一个开源项目text-generation-webui的web接口,使用其他llm模型

- 总结大工程源代码时,文本过长、token溢出的问题(目前的方法是直接二分丢弃处理溢出,过于粗暴,有效信息大量丢失)

-- UI不够美观

+

From d51ae6abb229cbac421bb22850655d67e956f08c Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 01:01:57 +0800

Subject: [PATCH 093/154] Update README.md

---

README.md | 3 +++

1 file changed, 3 insertions(+)

diff --git a/README.md b/README.md

index 21435b23..e885499c 100644

--- a/README.md

+++ b/README.md

@@ -46,6 +46,9 @@ arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下

图片显示 | 可以在markdown中显示图片

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

+huggingface免科学上网在线体验 | 登陆huggingface后复制一下[空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

+

+

…… | ……

From 417c8325deae820b05a350a8469f8d3e0352dd9f Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 01:03:00 +0800

Subject: [PATCH 094/154] Update README.md

---

README.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/README.md b/README.md

index e885499c..e1e6ece6 100644

--- a/README.md

+++ b/README.md

@@ -46,7 +46,7 @@ arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下

图片显示 | 可以在markdown中显示图片

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

-huggingface免科学上网在线体验 | 登陆huggingface后复制一下[空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

+huggingface免科学上网在线体验 | 登陆huggingface后复制[此空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

…… | ……

From 4b83486b3dc7a12fd8d699660c002bd4b1e5640e Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Mon, 3 Apr 2023 01:38:44 +0800

Subject: [PATCH 095/154] Update README.md

---

README.md | 2 --

1 file changed, 2 deletions(-)

diff --git a/README.md b/README.md

index e1e6ece6..84255244 100644

--- a/README.md

+++ b/README.md

@@ -47,8 +47,6 @@ arxiv小助手 | [函数插件] 输入arxiv文章url即可一键翻译摘要+下

多线程函数插件支持 | 支持多线调用chatgpt,一键处理海量文本或程序

支持GPT输出的markdown表格 | 可以输出支持GPT的markdown表格

huggingface免科学上网在线体验 | 登陆huggingface后复制[此空间](https://huggingface.co/spaces/qingxu98/gpt-academic)

-

-

…… | ……

','.....').replace('$','.')+"... ]") + stat = [f'执行中: {obs}\n\n' if alive else '已完成\n\n' for alive, obs in zip(th_alive, observe_win)] + stat_str = ''.join(stat) + chatbot[-1] = (chatbot[-1][0], f'多线程操作已经开始,完成情况: \n\n{stat_str}' + ''.join(['.']*(cnt%10+1))) yield chatbot, history, '正常' - # 把结果写入文件 + # 第9步:把结果写入文件 for index, h in enumerate(handles): h.join() # 这里其实不需要join了,肯定已经都结束了 fp = file_manifest[index] @@ -63,13 +152,17 @@ def 全项目切换英文(txt, top_p, temperature, chatbot, history, sys_prompt, i_say_show_user = i_say_show_user_buffer[index] where_to_relocate = f'gpt_log/generated_english_version/{fp}' - with open(where_to_relocate, 'w+', encoding='utf-8') as f: f.write(gpt_say.lstrip('```').rstrip('```')) + if gpt_say is not None: + with open(where_to_relocate, 'w+', encoding='utf-8') as f: + f.write(gpt_say) + else: # 失败 + shutil.copyfile(file_manifest[index], where_to_relocate) chatbot.append((i_say_show_user, f'[Local Message] 已完成{os.path.abspath(fp)}的转化,\n\n存入{os.path.abspath(where_to_relocate)}')) history.append(i_say_show_user); history.append(gpt_say) yield chatbot, history, '正常' time.sleep(1) - # 备份一个文件 + # 第10步:备份一个文件 res = write_results_to_file(history) chatbot.append(("生成一份任务执行报告", res)) yield chatbot, history, '正常' diff --git a/predict.py b/predict.py index f4c87cc1..2a1ef4d8 100644 --- a/predict.py +++ b/predict.py @@ -71,9 +71,10 @@ def predict_no_ui(inputs, top_p, temperature, history=[], sys_prompt=""): raise ConnectionAbortedError("Json解析不合常规,可能是文本过长" + response.text) -def predict_no_ui_long_connection(inputs, top_p, temperature, history=[], sys_prompt=""): +def predict_no_ui_long_connection(inputs, top_p, temperature, history=[], sys_prompt="", observe_window=None): """ 发送至chatGPT,等待回复,一次性完成,不显示中间过程。但内部用stream的方法避免有人中途掐网线。 + observe_window:用于负责跨越线程传递已经输出的部分,大部分时候仅仅为了fancy的视觉效果,留空即可 """ headers, payload = generate_payload(inputs, top_p, temperature, history, system_prompt=sys_prompt, stream=True) @@ -105,7 +106,10 @@ def 
predict_no_ui_long_connection(inputs, top_p, temperature, history=[], sys_pr delta = json_data["delta"] if len(delta) == 0: break if "role" in delta: continue - if "content" in delta: result += delta["content"]; print(delta["content"], end='') + if "content" in delta: + result += delta["content"] + print(delta["content"], end='') + if observe_window is not None: observe_window[0] += delta["content"] else: raise RuntimeError("意外Json结构:"+delta) if json_data['finish_reason'] == 'length': raise ConnectionAbortedError("正常结束,但显示Token不足。") diff --git a/requirements.txt b/requirements.txt index d71b4984..bdafbe33 100644 --- a/requirements.txt +++ b/requirements.txt @@ -3,3 +3,5 @@ requests[socks] mdtex2html Markdown latex2mathml +openai +transformers From 5b8cc5a8998644b0d6cbb5f557e295fb568fef4d Mon Sep 17 00:00:00 2001 From: binary-husky <96192199+binary-husky@users.noreply.github.com> Date: Tue, 4 Apr 2023 15:33:53 +0800 Subject: [PATCH 104/154] Update README.md --- README.md | 7 +++++++ 1 file changed, 7 insertions(+) diff --git a/README.md b/README.md index cf8dda34..7542bae0 100644 --- a/README.md +++ b/README.md @@ -257,6 +257,13 @@ python check_proxy.py

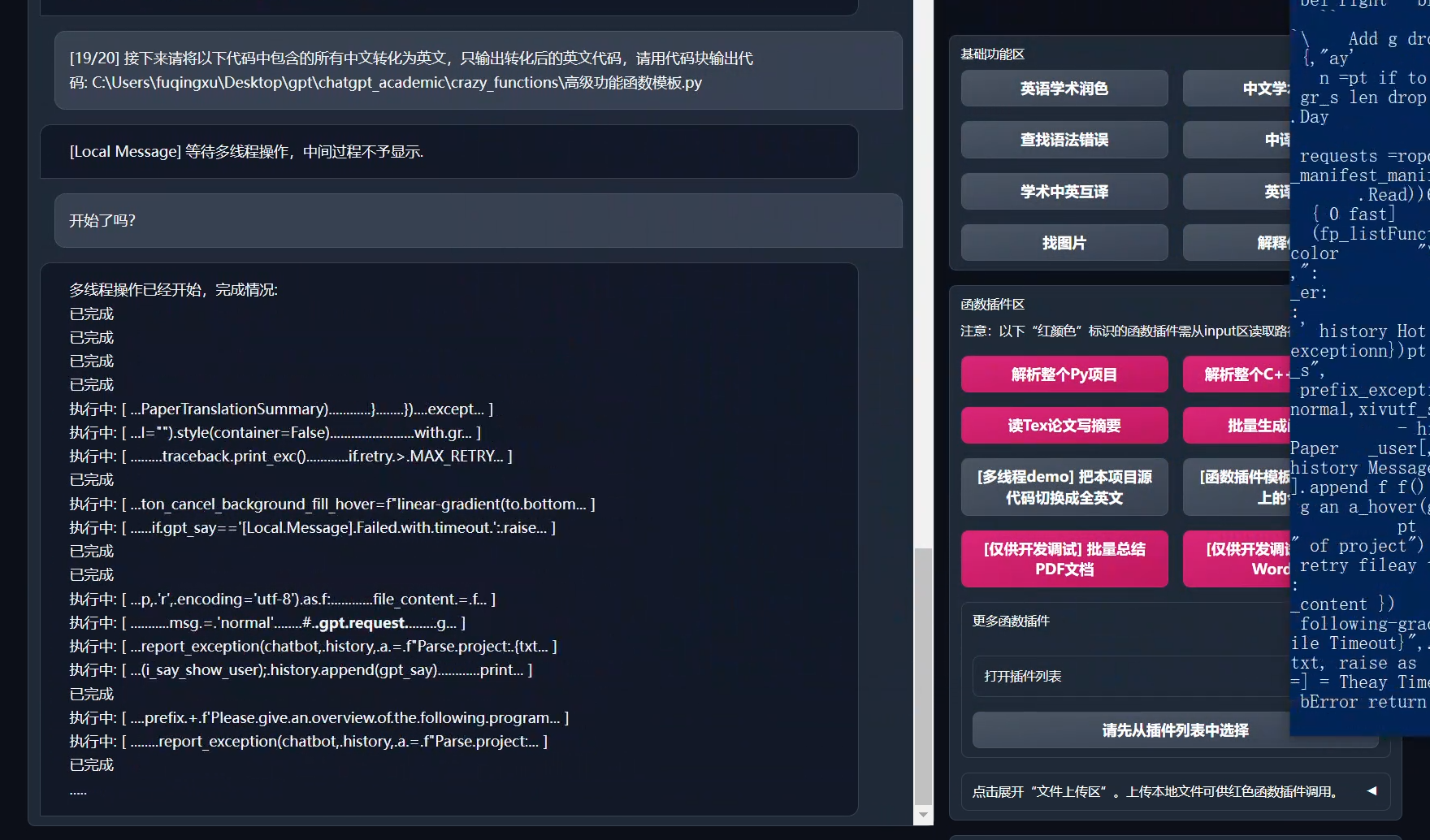

+

+### 源代码转译英文

+

+

+

+### 源代码转译英文

+

+

+ +

+

+

## Todo:

- (Top Priority) 调用另一个开源项目text-generation-webui的web接口,使用其他llm模型

From 005232afa6228c821e05099e9601da58722dc8da Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Tue, 4 Apr 2023 17:13:40 +0800

Subject: [PATCH 105/154] Update issue templates

---

.github/ISSUE_TEMPLATE/bug_report.md | 5 +++++

1 file changed, 5 insertions(+)

diff --git a/.github/ISSUE_TEMPLATE/bug_report.md b/.github/ISSUE_TEMPLATE/bug_report.md

index 9ab88316..ba11763d 100644

--- a/.github/ISSUE_TEMPLATE/bug_report.md

+++ b/.github/ISSUE_TEMPLATE/bug_report.md

@@ -12,3 +12,8 @@ assignees: ''

**Screen Shot 截图**

**Terminal Traceback 终端traceback(如果有)**

+

+

+Before submitting an issue 提交issue之前:

+- Please try updating your code first. 如果您的代码不是最新的,建议您先尝试更新代码

+- Please check the project wiki for solutions to common problems. 项目[wiki](https://github.com/binary-husky/chatgpt_academic/wiki)有一些常见问题的解决方法

From c9fa26405d4f98b4fea46a7570177e02f24b4518 Mon Sep 17 00:00:00 2001

From: qingxu fu <505030475@qq.com>

Date: Tue, 4 Apr 2023 21:38:20 +0800

Subject: [PATCH 106/154] Plan version numbers

MIME-Version: 1.0

Content-Type: text/plain; charset=UTF-8

Content-Transfer-Encoding: 8bit

---

README.md | 18 +++++++++++++-----

version | 1 +

2 files changed, 14 insertions(+), 5 deletions(-)

create mode 100644 version

diff --git a/README.md b/README.md

index 7542bae0..eef79e5a 100644

--- a/README.md

+++ b/README.md

@@ -264,9 +264,17 @@ python check_proxy.py

+

+

-## Todo:

-

-- (Top Priority) 调用另一个开源项目text-generation-webui的web接口,使用其他llm模型

-- 总结大工程源代码时,文本过长、token溢出的问题(目前的方法是直接二分丢弃处理溢出,过于粗暴,有效信息大量丢失)

-

+## Todo 与 版本规划:

+- version 3 (Todo):

+- - 支持gpt4和其他更多llm

+- version 2.3+ (Todo):

+- - 总结大工程源代码时文本过长、token溢出的问题

+- - 实现项目打包部署

+- - 函数插件参数接口优化

+- - 自更新

+- version 2.3: 增强多线程交互性

+- version 2.2: 函数插件支持热重载

+- version 2.1: 可折叠式布局

+- version 2.0: 引入模块化函数插件

+- version 1.0: 基础功能

\ No newline at end of file

diff --git a/version b/version

new file mode 100644

index 00000000..c0943d3e

--- /dev/null

+++ b/version

@@ -0,0 +1 @@

+2.3

\ No newline at end of file

From 99817e904012727a364fe0ff10a1a0f0ea7557fa Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Tue, 4 Apr 2023 22:17:47 +0800

Subject: [PATCH 107/154] Update version

---

version | 6 +++++-

1 file changed, 5 insertions(+), 1 deletion(-)

diff --git a/version b/version

index c0943d3e..21ab827c 100644

--- a/version

+++ b/version

@@ -1 +1,5 @@

-2.3

\ No newline at end of file

+{

+ 'version': 2.3,

+ 'show_feature': false,

+ 'new_feature': "修复多线程插件Bug;加入版本检查功能。",

+}
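The first revision of `version` above uses single-quoted keys and a trailing comma. Python's `json` module rejects both, which is presumably why the next two patches switch to double quotes and drop the comma (the lowercase `false` was already valid JSON). A minimal check with the standard-library `json` module; the `new_feature` text is replaced by a `"..."` placeholder:

```python
import json

# `version` as written in PATCH 107: single quotes and a trailing comma.
draft = """{
 'version': 2.3,
 'show_feature': false,
 'new_feature': "...",
}"""

# `version` after PATCH 108/109: double quotes, no trailing comma.
fixed = """{
 "version": 2.3,
 "show_feature": false,
 "new_feature": "..."
}"""

def parses_as_json(text: str) -> bool:
    """Return True if `text` is valid JSON according to Python's json module."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True

print(parses_as_json(draft))              # False
print(parses_as_json(fixed))              # True
print(json.loads(fixed)["show_feature"])  # False (JSON false becomes Python False)
```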

From 7b75422c2646acd111c8025ae11c5daed5ec4804 Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Tue, 4 Apr 2023 22:20:21 +0800

Subject: [PATCH 108/154] Update version

---

version | 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

diff --git a/version b/version

index 21ab827c..f799a3f4 100644

--- a/version

+++ b/version

@@ -1,5 +1,5 @@

{

- 'version': 2.3,

- 'show_feature': false,

- 'new_feature': "修复多线程插件Bug;加入版本检查功能。",

+ "version": 2.3,

+ "show_feature": false,

+ "new_feature": "修复多线程插件Bug;加入版本检查功能。",

}

From 1042d28e1fb4dcdb86510cb4902f3d966e81e54a Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Tue, 4 Apr 2023 22:20:39 +0800

Subject: [PATCH 109/154] Update version

---

version | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/version b/version

index f799a3f4..436307a6 100644

--- a/version

+++ b/version

@@ -1,5 +1,5 @@

{

"version": 2.3,

"show_feature": false,

- "new_feature": "修复多线程插件Bug;加入版本检查功能。",

+ "new_feature": "修复多线程插件Bug;加入版本检查功能。"

}

From a239abac501244b202cf1b2dfd73d9266b7bbe5d Mon Sep 17 00:00:00 2001

From: binary-husky <96192199+binary-husky@users.noreply.github.com>

Date: Tue, 4 Apr 2023 22:34:28 +0800

Subject: [PATCH 110/154] Update version

---

version | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/version b/version

index 436307a6..ce7c84e1 100644

--- a/version

+++ b/version

@@ -1,5 +1,5 @@

{

"version": 2.3,

- "show_feature": false,

+ "show_feature": true,

"new_feature": "修复多线程插件Bug;加入版本检查功能。"

}

From c40f6f00bbb3776a8f4b356ce738b4b925b1af2e Mon Sep 17 00:00:00 2001

From: qingxu fu <505030475@qq.com>

Date: Tue, 4 Apr 2023 22:54:08 +0800

Subject: [PATCH 111/154] check_new_version

---

check_proxy.py | 24 ++++++++++++++++++++++++

main.py | 10 ++++++++--

2 files changed, 32 insertions(+), 2 deletions(-)

diff --git a/check_proxy.py b/check_proxy.py

index a6919dd3..abc75d04 100644

--- a/check_proxy.py

+++ b/check_proxy.py

@@ -19,6 +19,30 @@ def check_proxy(proxies):

return result

+def auto_update():

+ from toolbox import get_conf

+ import requests, time, json

+ proxies, = get_conf('proxies')

+ response = requests.get("https://raw.githubusercontent.com/binary-husky/chatgpt_academic/master/version",

+ proxies=proxies, timeout=1)

+ remote_json_data = json.loads(response.text)

+ remote_version = remote_json_data['version']

+ if remote_json_data["show_feature"]:

+ new_feature = "新功能:" + remote_json_data["new_feature"]

+ else:

+ new_feature = ""

+ with open('./version', 'r', encoding='utf8') as f:

+ current_version = f.read()

+ current_version = json.loads(current_version)['version']

+ if (remote_version - current_version) >= 0.05:

+ print(f'\n新版本可用。新版本:{remote_version},当前版本:{current_version}。{new_feature}')

+ print('Github更新地址:\nhttps://github.com/binary-husky/chatgpt_academic\n')

+ time.sleep(3)

+ return

+ else:

+ return

+

+

if __name__ == '__main__':

import os; os.environ['no_proxy'] = '*' # 避免代理网络产生意外污染

from toolbox import get_conf
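`auto_update` reads both versions as JSON numbers, which become binary floats, and tests `remote_version - current_version >= 0.05`. Neither 0.05 nor most decimal versions are exactly representable as floats, so a gap that is 0.05 on paper can land slightly on either side of the threshold. One way to keep the comparison exact is to have `json.loads` build `decimal.Decimal` values through its `parse_float` hook. The sketch below shows this under that assumption; it is not the project's code, and `read_version` and `version_gap_exceeds` are hypothetical helper names:

```python
import json
from decimal import Decimal

def read_version(text: str) -> Decimal:
    """Parse a `version` file and return its "version" field as an exact Decimal."""
    # parse_float makes json build Decimal("2.35") instead of the float 2.35
    return json.loads(text, parse_float=Decimal)["version"]

def version_gap_exceeds(remote_text: str, local_text: str,
                        threshold: Decimal = Decimal("0.05")) -> bool:
    """Hypothetical helper: True if remote is ahead of local by at least `threshold`."""
    return read_version(remote_text) - read_version(local_text) >= threshold

local = '{"version": 2.35, "show_feature": false, "new_feature": ""}'
remote = '{"version": 2.4, "show_feature": true, "new_feature": "..."}'
print(version_gap_exceeds(remote, local))  # True: Decimal("2.4") - Decimal("2.35") == Decimal("0.05")
print(version_gap_exceeds(local, local))   # False
```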

diff --git a/main.py b/main.py

index 973d16fb..48ea670f 100644

--- a/main.py

+++ b/main.py

@@ -37,6 +37,11 @@ gr.Chatbot.postprocess = format_io

from theme import adjust_theme, advanced_css

set_theme = adjust_theme()

+# Proxy check and auto-update

+from check_proxy import check_proxy, auto_update

+proxy_info = check_proxy(proxies)

+

+

cancel_handles = []

with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as demo:

gr.HTML(title_html)

@@ -54,8 +59,7 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as de

resetBtn = gr.Button("重置", variant="secondary"); resetBtn.style(size="sm")

stopBtn = gr.Button("停止", variant="secondary"); stopBtn.style(size="sm")

with gr.Row():

- from check_proxy import check_proxy

- status = gr.Markdown(f"Tip: 按Enter提交, 按Shift+Enter换行。当前模型: {LLM_MODEL} \n {check_proxy(proxies)}")

+ status = gr.Markdown(f"Tip: 按Enter提交, 按Shift+Enter换行。当前模型: {LLM_MODEL} \n {proxy_info}")

with gr.Accordion("基础功能区", open=True) as area_basic_fn:

with gr.Row():

for k in functional:

@@ -139,6 +143,8 @@ def auto_opentab_delay():

print(f"\t(暗色主体): http://localhost:{PORT}/?__dark-theme=true")

def open():

time.sleep(2)

+ try: auto_update() # check for a new version

+ except: pass

webbrowser.open_new_tab(f"http://localhost:{PORT}/?__dark-theme=true")

threading.Thread(target=open, name="open-browser", daemon=True).start()
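PATCH 111 runs `auto_update()` inside the browser-opening thread and silences any error with a bare `except: pass`. As a result, an unreachable GitHub only delays the tab and never blocks startup. The following is a minimal sketch of the same pattern. It assumes the update check and the tab-opening action are passed in as callables (`open_after_update_check` is a hypothetical name) and prints the failure instead of discarding it:

```python
import threading
import time
import traceback
from typing import Callable

def open_after_update_check(check: Callable[[], None],
                            open_tab: Callable[[], None],
                            delay: float = 2.0) -> threading.Thread:
    """Start a daemon thread that waits, runs `check`, then always calls `open_tab`."""
    def worker() -> None:
        time.sleep(delay)
        try:
            check()  # e.g. auto_update(); a network failure must not block the browser
        except Exception:
            # Catch Exception rather than using a bare `except:`, and print the
            # traceback so the failure stays visible in the terminal.
            traceback.print_exc()
        open_tab()  # e.g. webbrowser.open_new_tab(url)

    # daemon=True: the thread never keeps the process alive on shutdown
    t = threading.Thread(target=worker, name="open-browser", daemon=True)
    t.start()
    return t

# Usage: a check that fails still lets the "browser" open.
opened = []

def failing_check() -> None:
    raise TimeoutError("simulated unreachable GitHub")

t = open_after_update_check(failing_check, lambda: opened.append(True), delay=0)
t.join()
print(opened)  # [True]
```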

From 1da60b7a0c43f56a3b25566156a049c46527b1db Mon Sep 17 00:00:00 2001

From: qingxu fu <505030475@qq.com>

Date: Tue, 4 Apr 2023 22:56:06 +0800

Subject: [PATCH 112/154] merge

---

main.py | 19 +++++++++++--------

toolbox.py | 16 ++++++++++++++--

2 files changed, 25 insertions(+), 10 deletions(-)

diff --git a/main.py b/main.py

index 48ea670f..123374e0 100644

--- a/main.py

+++ b/main.py

@@ -1,7 +1,7 @@

import os; os.environ['no_proxy'] = '*' # 避免代理网络产生意外污染

import gradio as gr

from predict import predict

-from toolbox import format_io, find_free_port, on_file_uploaded, on_report_generated, get_conf

+from toolbox import format_io, find_free_port, on_file_uploaded, on_report_generated, get_conf, ArgsGeneralWrapper

# 建议您复制一个config_private.py放自己的秘密, 如API和代理网址, 避免不小心传github被别人看到

proxies, WEB_PORT, LLM_MODEL, CONCURRENT_COUNT, AUTHENTICATION, CHATBOT_HEIGHT = \

@@ -87,8 +87,12 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as de

system_prompt = gr.Textbox(show_label=True, placeholder=f"System Prompt", label="System prompt", value=initial_prompt)

top_p = gr.Slider(minimum=-0, maximum=1.0, value=1.0, step=0.01,interactive=True, label="Top-p (nucleus sampling)",)

temperature = gr.Slider(minimum=-0, maximum=2.0, value=1.0, step=0.01, interactive=True, label="Temperature",)

- checkboxes = gr.CheckboxGroup(["基础功能区", "函数插件区"], value=["基础功能区", "函数插件区"], label="显示/隐藏功能区")

+ checkboxes = gr.CheckboxGroup(["基础功能区", "函数插件区", "输入区2"], value=["基础功能区", "函数插件区"], label="显示/隐藏功能区")

gr.Markdown(description)

+ with gr.Accordion("输入区", open=True, visible=False) as input_crazy_fn:

+ with gr.Row():

+ txt2 = gr.Textbox(show_label=False, placeholder="Input question here.", label="输入区2").style(container=False)

+

# 功能区显示开关与功能区的互动

def fn_area_visibility(a):

ret = {}

@@ -97,17 +101,16 @@ with gr.Blocks(theme=set_theme, analytics_enabled=False, css=advanced_css) as de

return ret

checkboxes.select(fn_area_visibility, [checkboxes], [area_basic_fn, area_crazy_fn] )

# 整理反复出现的控件句柄组合

- input_combo = [txt, top_p, temperature, chatbot, history, system_prompt]

+ input_combo = [txt, txt2, top_p, temperature, chatbot, history, system_prompt]

output_combo = [chatbot, history, status]

- predict_args = dict(fn=predict, inputs=input_combo, outputs=output_combo)

- empty_txt_args = dict(fn=lambda: "", inputs=[], outputs=[txt]) # 用于在提交后清空输入栏

+ predict_args = dict(fn=ArgsGeneralWrapper(predict), inputs=input_combo, outputs=output_combo)

# 提交按钮、重置按钮

- cancel_handles.append(txt.submit(**predict_args)) #; txt.submit(**empty_txt_args) 在提交后清空输入栏

- cancel_handles.append(submitBtn.click(**predict_args)) #; submitBtn.click(**empty_txt_args) 在提交后清空输入栏

+ cancel_handles.append(txt.submit(**predict_args))

+ cancel_handles.append(submitBtn.click(**predict_args))

resetBtn.click(lambda: ([], [], "已重置"), None, output_combo)

# 基础功能区的回调函数注册

for k in functional:

- click_handle = functional[k]["Button"].click(predict, [*input_combo, gr.State(True), gr.State(k)], output_combo)

+ click_handle = functional[k]["Button"].click(fn=ArgsGeneralWrapper(predict), inputs=[*input_combo, gr.State(True), gr.State(k)], outputs=output_combo)

cancel_handles.append(click_handle)

# 文件上传区,接收文件后与chatbot的互动

file_upload.upload(on_file_uploaded, [file_upload, chatbot, txt], [chatbot, txt])

diff --git a/toolbox.py b/toolbox.py

index c55a48e5..00bb03e0 100644

--- a/toolbox.py

+++ b/toolbox.py

@@ -2,6 +2,18 @@ import markdown, mdtex2html, threading, importlib, traceback, importlib, inspect

from show_math import convert as convert_math

from functools import wraps, lru_cache

+def ArgsGeneralWrapper(f):

+ """

+ Decorator that regroups the input arguments, changing their order and structure.

+ """

+ def decorated(txt, txt2, top_p, temperature, chatbot, history, system_prompt, *args, **kwargs):

+ txt_passon = txt

+ if txt == "" and txt2 != "": txt_passon = txt2

+ yield from f(txt_passon, top_p, temperature, chatbot, history, system_prompt, *args, **kwargs)

+

+ return decorated

+

+

def get_reduce_token_percent(text):

try:

# text = "maximum context length is 4097 tokens. However, your messages resulted in 4870 tokens"
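`ArgsGeneralWrapper` lets every callback receive the seven widgets in `input_combo` while `predict` keeps its old signature. It consumes `txt2`, substitutes it for `txt` only when the main box is empty, and forwards trailing arguments (such as the `gr.State(True), gr.State(k)` values of the basic-function buttons) unchanged. The sketch below copies the wrapper and drives it with a hypothetical `fake_predict` generator so that it runs without Gradio. The `functools.wraps` call is an addition that is not in the patch:

```python
from functools import wraps

def ArgsGeneralWrapper(f):
    """Fall back to txt2 when txt is empty, then drop txt2 before calling f."""
    @wraps(f)  # added for illustration; the patch's version does not use wraps
    def decorated(txt, txt2, top_p, temperature, chatbot, history, system_prompt, *args, **kwargs):
        txt_passon = txt
        if txt == "" and txt2 != "":
            txt_passon = txt2
        yield from f(txt_passon, top_p, temperature, chatbot, history, system_prompt, *args, **kwargs)
    return decorated

# Hypothetical stand-in for predict(): records the text it received plus any extras.
def fake_predict(inputs, top_p, temperature, chatbot, history, system_prompt, *extra):
    chatbot.append((inputs, f"top_p={top_p}, extra={extra}"))
    yield chatbot, history, "ok"

wrapped = ArgsGeneralWrapper(fake_predict)

# Main input box empty, second box filled: txt2 is forwarded.
chatbot, history, status = next(wrapped("", "from txt2", 1.0, 1.0, [], [], ""))
print(chatbot[-1][0])  # from txt2

# Extra positional values pass through *args untouched.
chatbot2, _, _ = next(wrapped("hello", "", 0.5, 1.0, [], [], "", True, "Translate"))
print(chatbot2[-1][1])  # top_p=0.5, extra=(True, 'Translate')
```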

@@ -116,7 +128,7 @@ def CatchException(f):

from toolbox import get_conf

proxies, = get_conf('proxies')

tb_str = '```\n' + traceback.format_exc() + '```'

- if len(chatbot) == 0: chatbot.append(["插件调度异常","异常原因"])

+ if chatbot is None or len(chatbot) == 0: chatbot = [["插件调度异常","异常原因"]]

chatbot[-1] = (chatbot[-1][0], f"[Local Message] 实验性函数调用出错: \n\n{tb_str} \n\n当前代理可用性: \n\n{check_proxy(proxies)}")

yield chatbot, history, f'异常 {e}'

return decorated
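PATCH 112 hardens `CatchException`, apparently to cover a `chatbot` that arrives as `None`. The old guard `len(chatbot)` would itself raise `TypeError` inside the error handler, and the new code rebinds `chatbot` to a fresh one-row list before overwriting `chatbot[-1]`. Only part of the decorator is visible in this hunk, so what follows is a simplified sketch of the idea. It assumes a generator callback with the `predict`-style argument order and omits the proxy check. `catch_exception` and `failing_plugin` are hypothetical names, and the placeholder labels are English where the patch uses Chinese strings:

```python
import traceback
from functools import wraps

def catch_exception(f):
    """Hypothetical CatchException-style decorator for generator callbacks."""
    @wraps(f)
    def decorated(txt, top_p, temperature, chatbot, history, system_prompt, *args, **kwargs):
        try:
            yield from f(txt, top_p, temperature, chatbot, history, system_prompt, *args, **kwargs)
        except Exception as e:
            tb_str = traceback.format_exc()
            # Guard from the patch: a None or empty chatbot is replaced by a placeholder row
            if chatbot is None or len(chatbot) == 0:
                chatbot = [["Plugin dispatch error", "Cause"]]
            chatbot[-1] = (chatbot[-1][0], f"[Local Message] Plugin call failed:\n\n{tb_str}")
            yield chatbot, history, f"Error: {e}"
    return decorated

@catch_exception
def failing_plugin(txt, top_p, temperature, chatbot, history, system_prompt):
    raise ValueError("bad path")
    yield  # unreachable; its presence makes this function a generator

# With chatbot=None, the handler still yields a displayable error row.
result = list(failing_plugin("x", 1.0, 1.0, None, [], ""))
print(result[0][2])  # Error: bad path
```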

@@ -129,7 +141,7 @@ def HotReload(f):

def decorated(*args, **kwargs):

fn_name = f.__name__

f_hot_reload = getattr(importlib.reload(inspect.getmodule(f)), fn_name)